Forecasting COVID-Affected Data

This notebook showcases some scalecast techniques that can be used to forecast on series heavily affected by the COVID-19 pandemic. Special thanks to Zohoor Nezhad Halafi for helping with this notebook! Connect with her on LinkedIn.

[1]:

import pandas as pd
import numpy as np
from scalecast.Forecaster import Forecaster
from scalecast.MVForecaster import MVForecaster
from scalecast.SeriesTransformer import SeriesTransformer
from scalecast.AnomalyDetector import AnomalyDetector
from scalecast.ChangepointDetector import ChangepointDetector
from scalecast.util import plot_reduction_errors, break_mv_forecaster, metrics
from scalecast import GridGenerator
from scalecast.multiseries import export_model_summaries
from scalecast.auxmodels import mlp_stack, auto_arima
from statsmodels.tsa.stattools import adfuller
import matplotlib.pyplot as plt
import seaborn as sns
import os
from tqdm.notebook import tqdm
import pickle

sns.set(rc={'figure.figsize':(12,8)})

[2]:

GridGenerator.get_example_grids()  # saves scalecast's example Grids.py to the working directory (used by tune_test_forecast later)

[3]:

airline_series = ['Hou-Dom','IAH-DOM','IAH-Int']
fcst_horizon = 4

# one DataFrame per series, read from data/<series name>.csv
data = {
    l:pd.read_csv(os.path.join('data',l+'.csv'))
    for l in airline_series
}

# one Forecaster per series, forecasting fcst_horizon months ahead
fdict = {
    l:Forecaster(
        y=df['PASSENGERS'],
        current_dates=df['Date'],
        future_dates=fcst_horizon,
    ) for l,df in data.items()
}

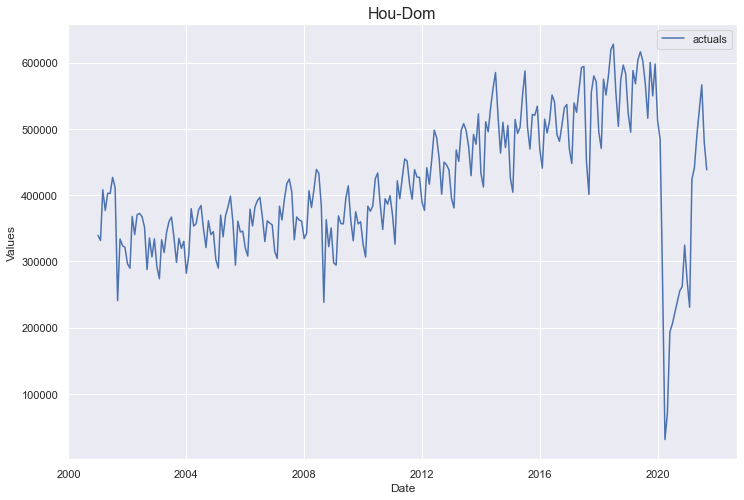

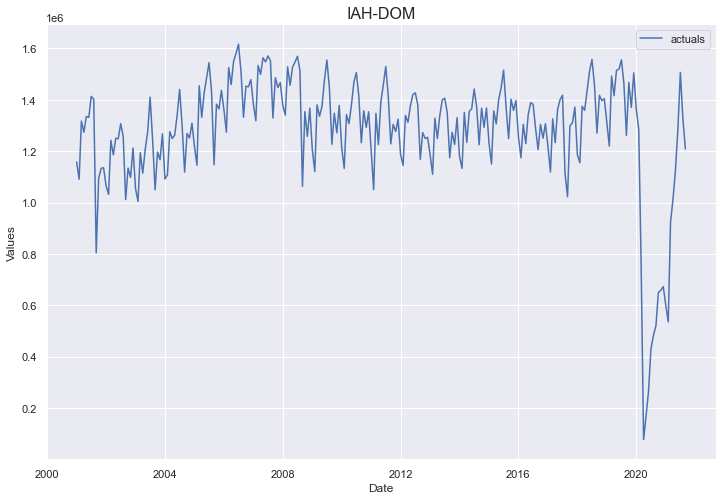

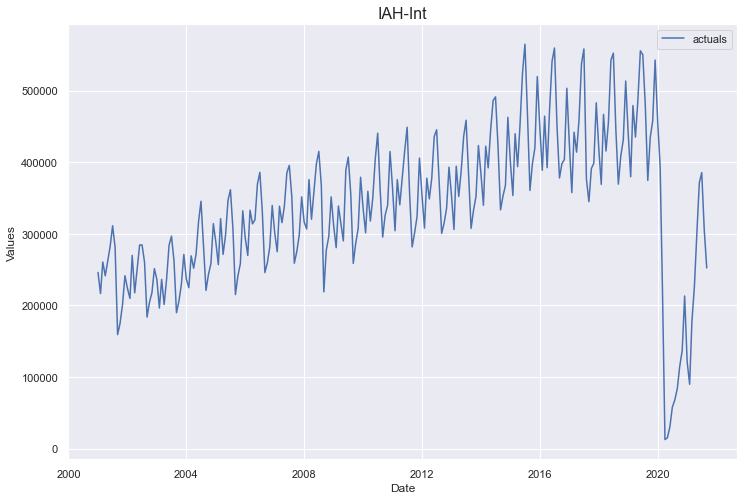

[4]:

for l, f in fdict.items():
    f.plot()
    plt.title(l,size=16)
    plt.show()

Add Anomalies to Model

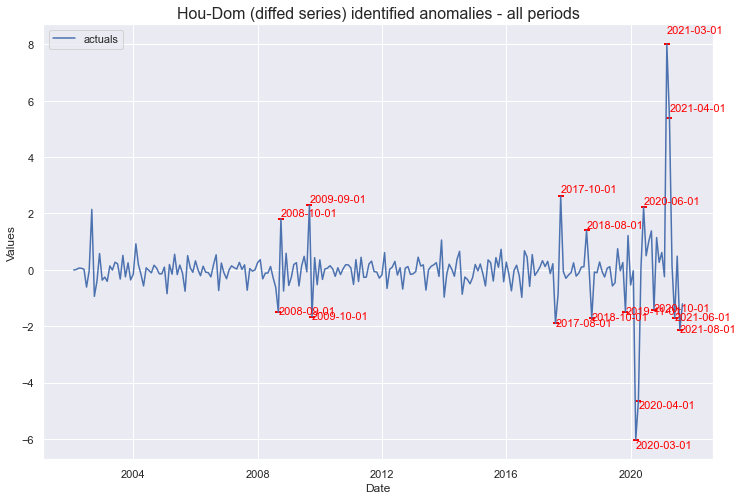

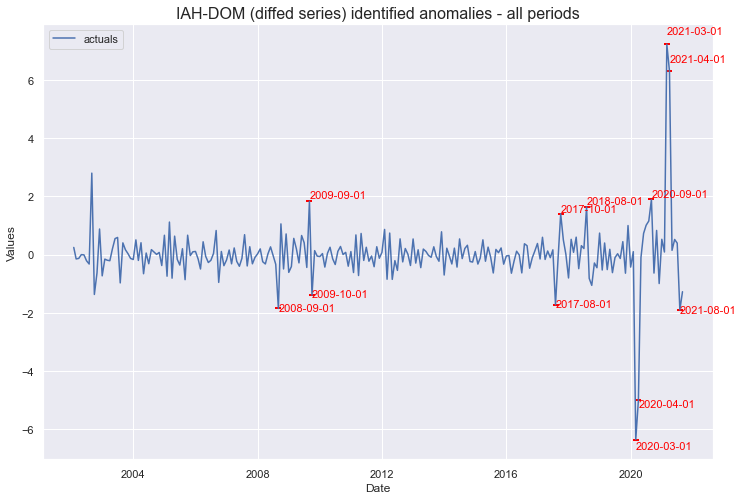

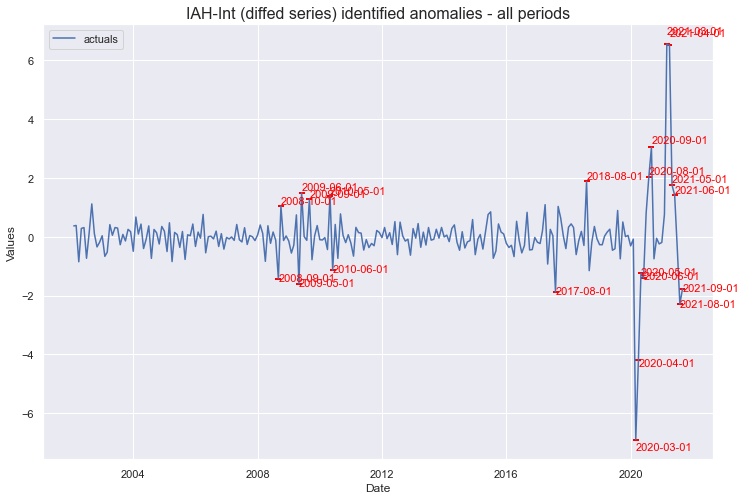

[5]:

# scan on whole dataset to compare
for l, f in fdict.items():
    # difference (lag 1, then lag 12) and scale the series before detecting anomalies
    tr = SeriesTransformer(f)
    tr.DiffTransform(1)
    tr.DiffTransform(12)
    f2 = tr.ScaleTransform()
    ad = AnomalyDetector(f2)
    ad.MonteCarloDetect_sliding(36,36)  # scans successive 36-observation windows (see log below)
    ad.plot_anom()
    plt.title(f'{l} (diffed series) identified anomalies - all periods',size=16)
    plt.show()

scanning from obs 36 to obs 72

scanning from obs 72 to obs 108

scanning from obs 108 to obs 144

scanning from obs 144 to obs 180

scanning from obs 180 to obs 216

scanning from obs 216 to obs 252

scanning from obs 36 to obs 72

scanning from obs 72 to obs 108

scanning from obs 108 to obs 144

scanning from obs 144 to obs 180

scanning from obs 180 to obs 216

scanning from obs 216 to obs 252

scanning from obs 36 to obs 72

scanning from obs 72 to obs 108

scanning from obs 108 to obs 144

scanning from obs 144 to obs 180

scanning from obs 180 to obs 216

scanning from obs 216 to obs 252

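Besides the COVID period, the full-history scan picks up three known shocks, which the next cell adds as one-month dummy regressors:

September 11, 2001

The recession, September 2008

Hurricane Harvey, August 2017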
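The detector runs on a transformed copy of each series: a first difference, a lag-12 seasonal difference, then scaling. The sketch below reproduces that transform with NumPy. It assumes the scaling is a plain z-score. It then flags points whose absolute z-score exceeds 3. This thresholding is a deliberately simpler stand-in and is *not* scalecast's Monte Carlo method. The function names and the threshold are hypothetical. One useful side effect is visible in the output: after double differencing, a single one-month shock shows up in several transformed points (the month, the month after, and their 12-month echoes). Raw flags on a differenced series therefore need interpretation.

```python
import numpy as np

def seasonal_diff(y, lag):
    """Return y[t] - y[t - lag]; the first `lag` points are dropped."""
    y = np.asarray(y, dtype=float)
    return y[lag:] - y[:-lag]

def flag_anomalies(y, season=12, threshold=3.0):
    """Simplified stand-in for anomaly detection (NOT scalecast's Monte Carlo method).

    Applies a first difference, then a lag-`season` difference, standardizes the
    result (assumed z-score), and flags points whose |z| exceeds `threshold`.
    Returned indices are positions in the original series.
    """
    z = seasonal_diff(seasonal_diff(y, 1), season)
    z = (z - z.mean()) / z.std()
    offset = 1 + season  # observations lost to the two differences
    return [int(i) + offset for i in np.flatnonzero(np.abs(z) > threshold)]

# Synthetic monthly series: trend + yearly seasonality + one shock at t = 100
t = np.arange(240)
y = 1000 + 2 * t + 100 * np.sin(2 * np.pi * t / 12)
y[100] -= 500  # a one-month collapse, e.g. a sudden travel shutdown
print(flag_anomalies(y))  # [100, 101, 112, 113]
```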

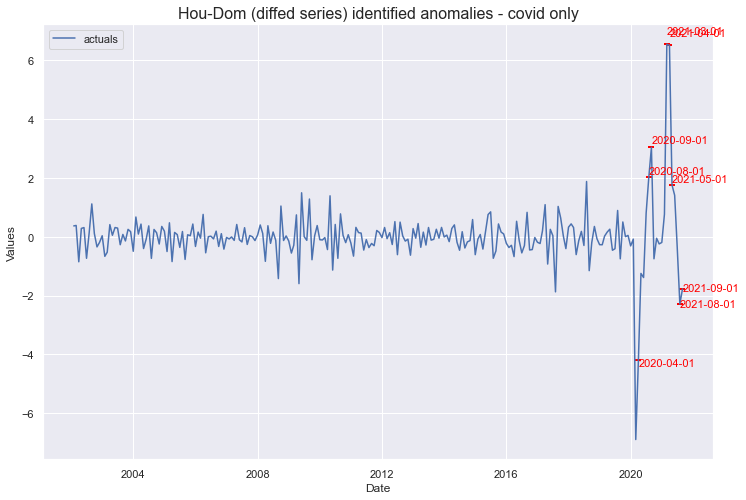

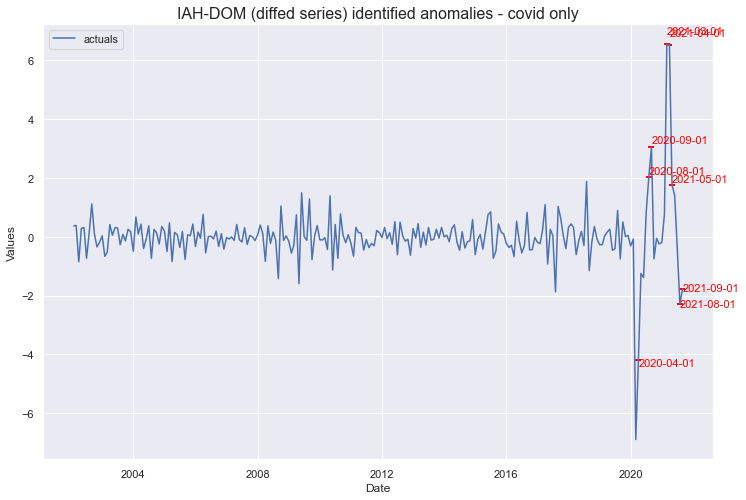

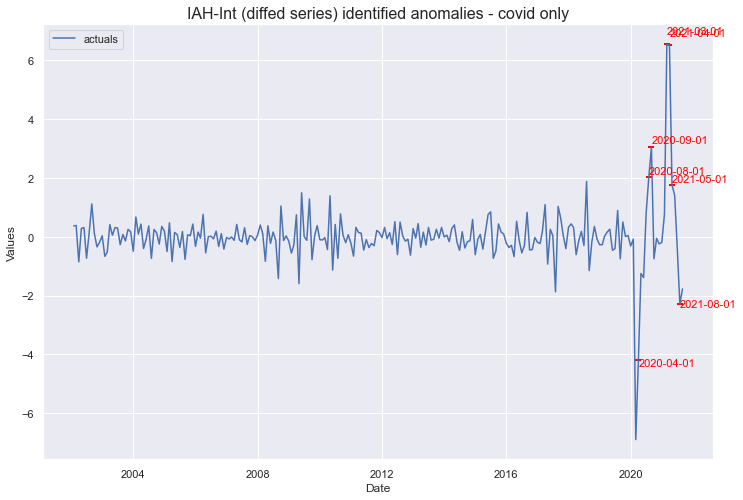

[6]:

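# scan over only covid periods
for l, f in fdict.items():
    # rebuild the transformed series and detector for this series; reusing `ad` from
    # the previous cell would apply the last series' detector to all three
    tr = SeriesTransformer(f)
    tr.DiffTransform(1)
    tr.DiffTransform(12)
    f2 = tr.ScaleTransform()
    ad = AnomalyDetector(f2)
    ad.MonteCarloDetect(start_at='2020-04-01',stop_at='2021-09-01')
    ad.plot_anom()
    plt.title(f'{l} (diffed series) identified anomalies - covid only',size=16)
    plt.show()
    # write the COVID anomalies to the original Forecaster as dummy Xvars
    f = ad.WriteAnomtoXvars(f=f,drop_first=True)
    # dummies for the one-off shocks found in the full-history scan
    f.add_other_regressor(start='2001-09-01', end='2001-09-01', called='2001')
    f.add_other_regressor(start='2008-09-01', end='2008-09-01', called='Great Recession')
    f.add_other_regressor(start='2017-08-01', end='2017-08-01', called='Harvey')
    fdict[l] = f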
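`add_other_regressor(start=..., end=..., called=...)` adds a regressor for a one-off event. Conceptually, it is a dummy variable that is 1 for dates between `start` and `end` (inclusive) and 0 elsewhere. The pandas sketch below builds such a column by hand for the Harvey month. The helper `event_dummy` is hypothetical. It only illustrates the encoding and does not reproduce scalecast's internals.

```python
import pandas as pd

def event_dummy(dates, start, end):
    """Hypothetical helper: 1 where start <= date <= end, else 0."""
    dates = pd.to_datetime(pd.Series(dates))
    inside = (dates >= pd.Timestamp(start)) & (dates <= pd.Timestamp(end))
    return inside.astype(int).to_numpy()

# Month-start dates around Hurricane Harvey
dates = pd.date_range('2017-06-01', periods=5, freq='MS')
harvey = event_dummy(dates, '2017-08-01', '2017-08-01')
print(harvey.tolist())  # [0, 0, 1, 0, 0]
```

Because `start` equals `end`, the dummy isolates a single month. A model can then absorb that month's shock without distorting the coefficients on the other variables.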

Add Changepoints to Model

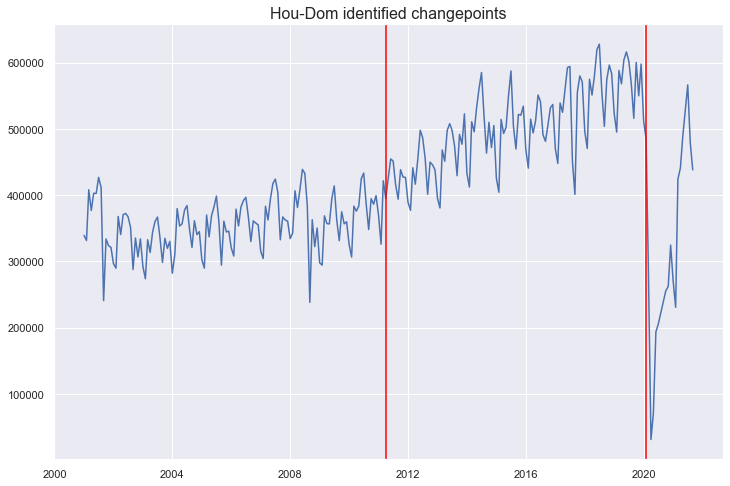

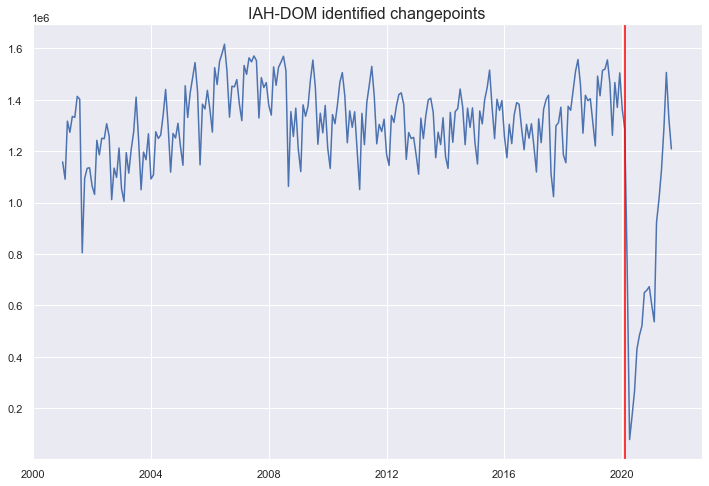

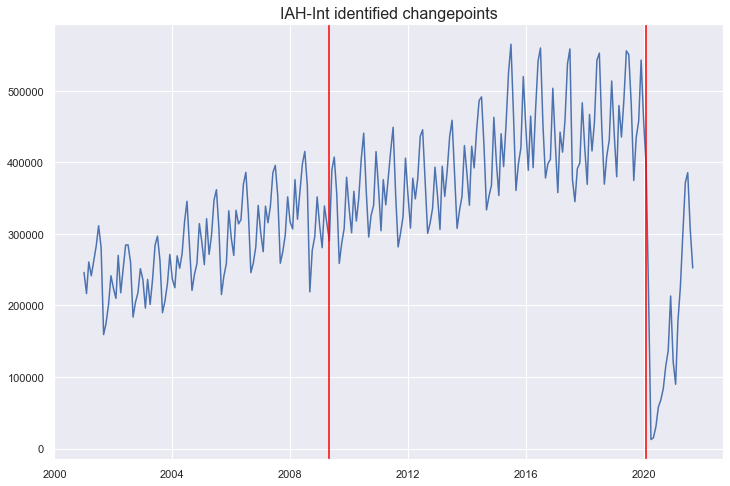

[7]:

for l, f in fdict.items():
    cd = ChangepointDetector(f)
    cd.DetectCPCUSUM()
    cd.plot()
    plt.title(f'{l} identified changepoints',size=16)
    plt.show()
    f = cd.WriteCPtoXvars()  # add the detected changepoints to the Forecaster as Xvars
    fdict[l] = f
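`DetectCPCUSUM()` searches for changepoints with a CUSUM-style statistic. The NumPy sketch below shows the textbook single-break version: it accumulates deviations from the overall mean and picks the point where the cumulative sum is furthest from zero. This is an illustration of the general idea under that assumption. It finds at most one break and is not claimed to match scalecast's detector. The final line turns the break into a step regressor (0 before, 1 from the break onward). That encoding is also an assumption about how the `cp` Xvars behave.

```python
import numpy as np

def cusum_changepoint(y):
    """Return the index where a single level shift most likely starts."""
    y = np.asarray(y, dtype=float)
    s = np.cumsum(y - y.mean())            # cumulative deviations from the mean
    return int(np.argmax(np.abs(s))) + 1   # first observation after the CUSUM peak

# Level drops from 100 to 40 at t = 30 (e.g. demand collapsing)
y = np.r_[np.full(30, 100.0), np.full(20, 40.0)]
cp = cusum_changepoint(y)
step = (np.arange(len(y)) >= cp).astype(int)  # 0 before the break, 1 after
print(cp, step[28:32].tolist())  # 30 [0, 0, 1, 1]
```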

Choose Xvars

[8]:

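regressors = pd.read_csv('data/Regressors.csv',parse_dates=['Date'],index_col=0)

# difference any regressor that fails the ADF stationarity test at the 1% level
for c in regressors:
    if adfuller(regressors[c])[1] >= 0.01:
        regressors[c] = regressors[c].diff()

regressors = regressors.fillna(0) # just for now

# pad with zeros over the forecast horizon (all three series share the same future dates);
# padding after differencing keeps the zeros out of the ADF test and the differences
for dt in fdict[airline_series[0]].future_dates:
    regressors.loc[dt] = [0]*regressors.shape[1]

regressors.head()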

[8]:

| Date | Number of available seats Domestic and international level | Number of domestic flights | Number of International flights | Revenue Passenger-miles/Domestic | Revenue Passenger-miles/International | TSI/Passengers | TSI/Freight | Trade, Transportation, and Utilities | All employees Information | Employees In financial activities | ... | Employees in Leisure and Hospitality | All Employees,Education and Health Services | All Employee,Mining and Logging: Oil and Gas Extraction | Employees in Other Services | Unemployement Rate in Houston Area | Texas Business CyclesIndex | PCE on Durable goods(Billions of Dollars, Monthly, Seasonally Adjusted Annual Rate) | PCE on Recreational activities | Recession | WTO Oil Price |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2001-01-01 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.00 | 4.1 | 0.0 | 0.0 | 0.0 | 0 | 0.00 |

| 2001-02-01 | -7491574.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | -0.1 | 0.51 | 0.11 | 0.10 | ... | 0.57 | 0.81 | 0.36 | -0.13 | 3.9 | 0.3 | 22.4 | 0.0 | 0 | 0.03 |

| 2001-03-01 | 8507261.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5 | 0.2 | -0.12 | -0.12 | 0.13 | ... | 0.38 | 0.60 | -0.02 | 0.33 | 4.1 | 0.1 | -13.8 | 0.0 | 0 | -2.37 |

| 2001-04-01 | -1273374.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.6 | -1.5 | -0.23 | -0.12 | -0.05 | ... | -0.18 | 0.72 | -0.07 | 0.29 | 4.0 | 0.0 | -16.8 | 0.0 | 1 | 0.17 |

| 2001-05-01 | 2815795.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.5 | 1.5 | 0.57 | -0.58 | -0.01 | ... | 0.18 | 1.61 | 0.73 | -0.22 | 4.2 | -0.2 | 7.7 | 0.0 | 1 | 1.23 |

5 rows × 21 columns
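Cell [8] does two things to the regressor frame:

- It appends one all-zero row per future date so the Xvars cover the forecast horizon.
- It first-differences columns whose ADF p-value is at least 0.01, then fills the resulting NaN with 0.

The pandas sketch below repeats those mechanics on a toy frame. To avoid depending on `adfuller`, the stationarity decision is passed in as a plain set of column names. `prepare_regressors` and the column names are hypothetical. The sketch differences *before* padding. Padding first would turn the first zero placeholder into a spurious jump equal to minus the last observed value.

```python
import pandas as pd

def prepare_regressors(df, future_dates, nonstationary):
    """Difference the listed columns, then append zero rows for future dates."""
    out = df.copy()
    for c in nonstationary:
        out[c] = out[c].diff()              # first difference; first row becomes NaN
    out = out.fillna(0)
    for dt in future_dates:
        out.loc[dt] = [0.0] * out.shape[1]  # placeholder values over the horizon
    return out

hist = pd.DataFrame(
    {'seats': [100.0, 110.0, 105.0], 'unemployment': [4.1, 3.9, 4.1]},
    index=pd.date_range('2001-01-01', periods=3, freq='MS'),
)
future = pd.date_range('2001-04-01', periods=2, freq='MS')
out = prepare_regressors(hist, future, nonstationary={'seats'})
print(out)
```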

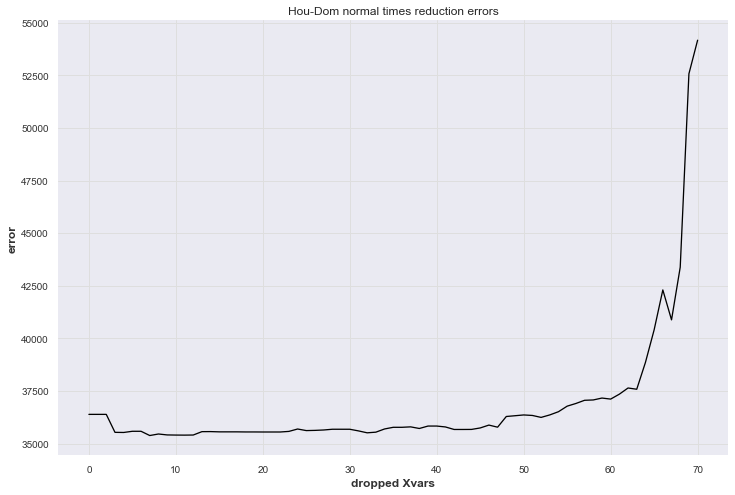

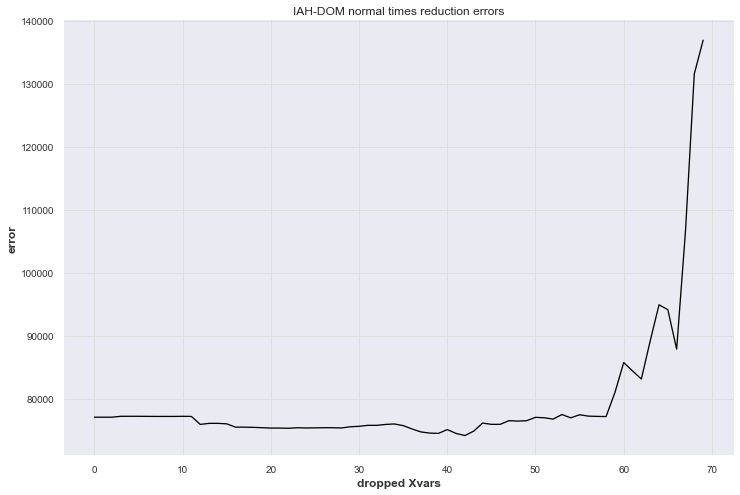

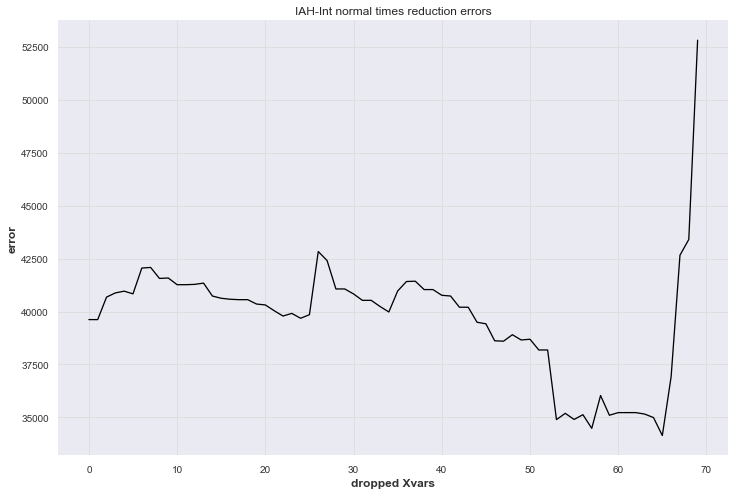

Variable Reduction

[9]:

dropped = pd.DataFrame(columns=list(fdict.keys()))

# first to get regressors for regular times
for l, f in fdict.items():
    print(f'reducing {l}')
    f2 = f.deepcopy()  # reduce on a copy so f keeps only its anomaly, event, and changepoint Xvars
    f2.add_ar_terms(36)
    f2.add_seasonal_regressors(
        'month',
        raw=False,
        sincos=True
    )
    f2.add_time_trend()
    f2.diff()
    f2.ingest_Xvars_df(
        regressors.reset_index(),
        date_col='Date',
    )
    f2.reduce_Xvars(
        method='pfi',
        estimator='elasticnet',
        cross_validate=True,
        cvkwargs={'k':3},
        dynamic_tuning=fcst_horizon, # optimize on fcst_horizon worth of predictions
        overwrite=False,
    )
    # record 1 for variables the reduction dropped, 0 for those it kept
    for x in f2.pfi_dropped_vars:
        dropped.loc[x,l] = 1
    for x in f2.reduced_Xvars:
        dropped.loc[x,l] = 0
    plot_reduction_errors(f2)
    plt.title(f'{l} normal times reduction errors',size=12)
    plt.show()

reducing Hou-Dom

reducing IAH-DOM

reducing IAH-Int
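`reduce_Xvars(method='pfi', ...)` ranks variables by permutation feature importance (PFI). The idea is as follows:

1. Fit a model.
2. Shuffle one column at a time and measure how much the error grows.
3. Treat columns whose shuffling barely hurts as candidates to drop.

The NumPy sketch below computes PFI scores for an ordinary least-squares fit on synthetic data, scored on the training data for brevity. It swaps in OLS for the elastic net used above. It also omits the iterative drop-and-revalidate loop traced by the reduction-error plots, so treat it as an illustration of the scoring step only.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 3))
# Only the first two columns matter; the third is pure noise
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=n)

def fit_ols(X, y):
    A = np.c_[np.ones(len(X)), X]                # add an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def mse(coef, X, y):
    pred = np.c_[np.ones(len(X)), X] @ coef
    return float(np.mean((y - pred) ** 2))

def permutation_importance(X, y, n_repeats=10, seed=1):
    """Mean increase in MSE when each column is shuffled."""
    rng = np.random.default_rng(seed)
    coef = fit_ols(X, y)
    base = mse(coef, X, y)
    scores = []
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # break the link between column j and y
            increases.append(mse(coef, Xp, y) - base)
        scores.append(float(np.mean(increases)))
    return scores

scores = permutation_importance(X, y)
print([round(s, 2) for s in scores])  # the noise column scores near zero
```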

Which variables were dropped most?

[10]:

dropped = dropped.fillna(0).drop('total times dropped',axis=1,errors='ignore')
dropped['total times dropped'] = dropped.sum(axis=1)
dropped.sort_values(['total times dropped'],ascending=False).head(25)

[10]:

| Variable | Hou-Dom | IAH-DOM | IAH-Int | total times dropped |

|---|---|---|---|---|

| 2001 | 1 | 1 | 1 | 3 |

| PCE on Recreational activities | 1 | 1 | 1 | 3 |

| AR16 | 1 | 1 | 1 | 3 |

| AR11 | 1 | 1 | 1 | 3 |

| Anomaly_2021-05-01 | 1 | 1 | 1 | 3 |

| Unemployement Rate in Houston Area | 1 | 1 | 1 | 3 |

| AR33 | 0 | 1 | 1 | 2 |

| AR10 | 0 | 1 | 1 | 2 |

| AR5 | 0 | 1 | 1 | 2 |

| Harvey | 0 | 1 | 1 | 2 |

| WTO Oil Price | 0 | 1 | 1 | 2 |

| AR13 | 0 | 1 | 1 | 2 |

| AR15 | 0 | 1 | 1 | 2 |

| AR21 | 0 | 1 | 1 | 2 |

| TSI/Freight | 0 | 1 | 1 | 2 |

| Anomaly_2021-09-01 | 1 | 1 | 0 | 2 |

| Anomaly_2021-03-01 | 0 | 1 | 1 | 2 |

| AR3 | 0 | 1 | 1 | 2 |

| All Employees,Education and Health Services | 0 | 1 | 1 | 2 |

| Employees In financial activities | 0 | 1 | 1 | 2 |

| AR34 | 0 | 1 | 1 | 2 |

| AR2 | 0 | 1 | 1 | 2 |

| AR22 | 0 | 1 | 1 | 2 |

| AR27 | 0 | 1 | 1 | 2 |

| Anomaly_2020-08-01 | 0 | 1 | 1 | 2 |

Which variables were dropped least?

[11]:

dropped.sort_values(['total times dropped']).head(40)

[11]:

| Variable | Hou-Dom | IAH-DOM | IAH-Int | total times dropped |

|---|---|---|---|---|

| AR36 | 0 | 0 | 0 | 0 |

| Revenue Passenger-miles/Domestic | 0 | 0 | 0 | 0 |

| AR18 | 0 | 0 | 0 | 0 |

| Number of domestic flights | 0 | 0 | 0 | 0 |

| Number of International flights | 0 | 0 | 0 | 0 |

| cp2 | 0 | 0 | 1 | 1 |

| AR17 | 0 | 0 | 1 | 1 |

| All employees Information | 0 | 0 | 1 | 1 |

| Employees in Other Services | 0 | 0 | 1 | 1 |

| Employees in Leisure and Hospitality | 0 | 0 | 1 | 1 |

| Anomaly_2020-04-01 | 0 | 0 | 1 | 1 |

| PCE on Durable goods(Billions of Dollars, Monthly, Seasonally Adjusted Annual Rate) | 0 | 0 | 1 | 1 |

| AR25 | 0 | 0 | 1 | 1 |

| AR31 | 0 | 0 | 1 | 1 |

| AR6 | 0 | 0 | 1 | 1 |

| Trade, Transportation, and Utilities | 0 | 0 | 1 | 1 |

| AR29 | 0 | 0 | 1 | 1 |

| AR24 | 0 | 0 | 1 | 1 |

| AR26 | 0 | 0 | 1 | 1 |

| AR19 | 0 | 0 | 1 | 1 |

| Revenue Passenger-miles/International | 0 | 0 | 1 | 1 |

| Recession | 0 | 0 | 1 | 1 |

| AR14 | 0 | 0 | 1 | 1 |

| Texas Business CyclesIndex | 0 | 0 | 1 | 1 |

| AR12 | 0 | 0 | 1 | 1 |

| AR1 | 0 | 0 | 1 | 1 |

| TSI/Passengers | 0 | 0 | 1 | 1 |

| AR30 | 0 | 0 | 1 | 1 |

| Number of available seats Domestic and international level | 0 | 0 | 1 | 1 |

| Anomaly_2021-03-01 | 0 | 1 | 1 | 2 |

| AR22 | 0 | 1 | 1 | 2 |

| All Employees,Education and Health Services | 0 | 1 | 1 | 2 |

| AR2 | 0 | 1 | 1 | 2 |

| Employees In financial activities | 0 | 1 | 1 | 2 |

| TSI/Freight | 0 | 1 | 1 | 2 |

| AR34 | 0 | 1 | 1 | 2 |

| Anomaly_2020-08-01 | 0 | 1 | 1 | 2 |

| AR21 | 0 | 1 | 1 | 2 |

| Anomaly_2021-09-01 | 1 | 1 | 0 | 2 |

| AR9 | 0 | 1 | 1 | 2 |

What about AR terms?

[12]:

ar_dropped = dropped.reset_index()
# keep only the autoregressive terms (AR1 ... AR36); .copy() avoids SettingWithCopyWarning
ar_dropped = ar_dropped.loc[ar_dropped['index'].str.match(r'AR\d+$')].copy()
ar_dropped['ar'] = ar_dropped['index'].str[2:].astype(int)
ar_dropped.sort_values('ar')

[12]:

|  | index | Hou-Dom | IAH-DOM | IAH-Int | total times dropped | ar |

|---|---|---|---|---|---|---|

| 53 | AR1 | 0 | 0 | 1 | 1 | 1 |

| 60 | AR2 | 0 | 1 | 1 | 2 | 2 |

| 25 | AR3 | 0 | 1 | 1 | 2 | 3 |

| 9 | AR4 | 0 | 1 | 1 | 2 | 4 |

| 28 | AR5 | 0 | 1 | 1 | 2 | 5 |

| 37 | AR6 | 0 | 0 | 1 | 1 | 6 |

| 19 | AR7 | 0 | 1 | 1 | 2 | 7 |

| 24 | AR8 | 0 | 1 | 1 | 2 | 8 |

| 7 | AR9 | 0 | 1 | 1 | 2 | 9 |

| 27 | AR10 | 0 | 1 | 1 | 2 | 10 |

| 4 | AR11 | 1 | 1 | 1 | 3 | 11 |

| 66 | AR12 | 0 | 0 | 1 | 1 | 12 |

| 31 | AR13 | 0 | 1 | 1 | 2 | 13 |

| 58 | AR14 | 0 | 0 | 1 | 1 | 14 |

| 32 | AR15 | 0 | 1 | 1 | 2 | 15 |

| 3 | AR16 | 1 | 1 | 1 | 3 | 16 |

| 49 | AR17 | 0 | 0 | 1 | 1 | 17 |

| 51 | AR18 | 0 | 0 | 0 | 0 | 18 |

| 56 | AR19 | 0 | 0 | 1 | 1 | 19 |

| 13 | AR20 | 0 | 1 | 1 | 2 | 20 |

| 33 | AR21 | 0 | 1 | 1 | 2 | 21 |

| 61 | AR22 | 0 | 1 | 1 | 2 | 22 |

| 12 | AR23 | 0 | 1 | 1 | 2 | 23 |

| 70 | AR24 | 0 | 0 | 1 | 1 | 24 |

| 40 | AR25 | 0 | 0 | 1 | 1 | 25 |

| 55 | AR26 | 0 | 0 | 1 | 1 | 26 |

| 26 | AR27 | 0 | 1 | 1 | 2 | 27 |

| 10 | AR28 | 0 | 1 | 1 | 2 | 28 |

| 36 | AR29 | 0 | 0 | 1 | 1 | 29 |

| 62 | AR30 | 0 | 0 | 1 | 1 | 30 |

| 38 | AR31 | 0 | 0 | 1 | 1 | 31 |

| 14 | AR32 | 0 | 1 | 1 | 2 | 32 |

| 34 | AR33 | 0 | 1 | 1 | 2 | 33 |

| 52 | AR34 | 0 | 1 | 1 | 2 | 34 |

| 23 | AR35 | 0 | 1 | 1 | 2 | 35 |

| 69 | AR36 | 0 | 0 | 0 | 0 | 36 |
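Each `ARk` variable in the table is the target lagged by k periods, so `add_ar_terms(36)` created AR1 through AR36. The minimal pandas sketch below builds such columns for a toy series. In this sketch, the first k rows of `ARk` have no lagged value and are left as NaN. The helper name is hypothetical.

```python
import pandas as pd

def make_ar_terms(y, lags):
    """Return a frame with one column ARk = y shifted back k periods."""
    y = pd.Series(y, dtype=float)
    return pd.DataFrame({f'AR{k}': y.shift(k) for k in lags})

ar = make_ar_terms([10, 11, 12, 13, 14, 15], lags=[1, 2])
print(ar)
```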

Subjectively make selections

[13]:

# external regressors that no series dropped
final_Xvars_selected = [
    x for x in dropped.loc[dropped['total times dropped'] == 0].index if x in regressors
]
# anomaly, event, and changepoint dummies dropped by at most one series
final_anom_selected = [
    x for x in dropped.loc[dropped['total times dropped'] <= 1].index
    if x.startswith('Anomaly') or x.startswith('cp') or x in ('Harvey','2001','Great Recession')
]
# lags used for each airline series in the multivariate models
lags = {
    'Hou-Dom':[1,12,24,36],
    'IAH-DOM':[1,12,24,36],
    'IAH-Int':[1,12,24,36],
}
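In `MVForecaster`, the `lags` dictionary gives each series its own list of lags. Here every airline series uses lags 1, 12, 24, and 36, and each external regressor later receives lag 1. The sketch below shows how such a mapping could turn several aligned series into one feature matrix, the kind of input a multivariate model sees. It uses toy data. The helper is hypothetical and illustrates the concept, not scalecast's internal code.

```python
import numpy as np

def lagged_design(series, lags):
    """Stack lagged copies of several aligned series into a feature matrix.

    series: dict name -> 1-D array (all the same length)
    lags:   dict name -> list of positive lags
    Rows before the largest lag are dropped so every feature is observed.
    """
    max_lag = max(max(v) for v in lags.values())
    n = len(next(iter(series.values())))
    columns, names = [], []
    for name, ls in lags.items():
        y = np.asarray(series[name], dtype=float)
        for k in ls:
            columns.append(y[max_lag - k : n - k])  # value k steps before each target row
            names.append(f'{name}_lag{k}')
    return np.column_stack(columns), names

series = {'airline': np.arange(10.0), 'flights': np.arange(100.0, 110.0)}
X, names = lagged_design(series, {'airline': [1, 3], 'flights': [1]})
print(names, X.shape)
```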

[14]:

for l, f in fdict.items():
    # keep only the selected anomaly, event, and changepoint dummies
    f.drop_Xvars(*[x for x in f.get_regressor_names() if x not in final_anom_selected])
    f.add_seasonal_regressors('month',raw=False,sincos=True)
    f.diff()

Multivariate Forecasting

[15]:

mvfdict = fdict.copy()
# add each selected regressor as its own series, excluding the zero-padded future rows
for x in final_Xvars_selected:
    if x in regressors:
        mvfdict[x] = Forecaster(
            y=regressors[x].values[:-fcst_horizon],
            current_dates=regressors.index.values[:-fcst_horizon],
        )
        lags[x] = [1]

for l, f in fdict.items():
    f.drop_Xvars(*[x for x in f.get_regressor_names() if x in regressors])

mvf = MVForecaster(*mvfdict.values(),names=mvfdict.keys())
mvf.add_optimizer_func(lambda x: x[0]*(1/3) + x[1]*(1/3) + x[2]*(1/3),'weighted') # first three series only get weight
mvf.set_optimize_on('weighted')
mvf.set_test_length(fcst_horizon)
mvf.set_validation_metric('mae')
mvf.set_validation_length(36)
mvf

[15]:

MVForecaster(

DateStartActuals=2001-01-01T00:00:00.000000000

DateEndActuals=2021-09-01T00:00:00.000000000

Freq=MS

N_actuals=249

N_series=6

SeriesNames=['Hou-Dom', 'IAH-DOM', 'IAH-Int', 'Number of International flights', 'Number of domestic flights', 'Revenue Passenger-miles/Domestic']

ForecastLength=4

Xvars=['Anomaly_2020-04-01', 'cp2', 'monthsin', 'monthcos']

TestLength=4

ValidationLength=36

ValidationMetric=mae

ForecastsEvaluated=[]

CILevel=0.95

BootstrapSamples=100

CurrentEstimator=mlr

OptimizeOn=weighted

)
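The function registered with `add_optimizer_func` collapses the per-series validation metrics into a single number for tuning. As written, it averages the first three entries (the three airline series) and gives the extra regressor series zero weight. This assumes the metrics arrive in the order the series were passed to `MVForecaster`. The quick check below uses made-up MAE values.

```python
def weighted(x):
    """Equal-weight average of the first three series' metrics; the rest get weight 0."""
    return x[0] * (1 / 3) + x[1] * (1 / 3) + x[2] * (1 / 3)

# Hypothetical validation MAEs for the six series, in SeriesNames order
maes = [30.0, 60.0, 90.0, 5.0, 1000.0, 7.0]
print(weighted(maes))  # the large error on the fifth series has no influence
```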

[16]:

elasticnet = {
    'alpha':[i/10 for i in range(1,21)],
    'l1_ratio':[0,0.25,0.5,0.75,1],
    'normalizer':['scale','minmax',None],
    'lags':[lags],
}

knn = {
    'n_neighbors':range(2,76),
    'lags':[lags],
}

lightgbm = {
    'n_estimators':[150,200,250],
    'boosting_type':['gbdt','dart','goss'],
    'max_depth':[1,2,3],
    'learning_rate':[0.001,0.01,0.1],
    'lags':[lags],
}

mlp = {
    'activation':['relu','tanh'],
    'hidden_layer_sizes':[(25,),(25,25,)],
    'solver':['lbfgs','adam'],
    'normalizer':['minmax','scale'],
    'lags':[lags],
}

mlr = {
    'normalizer':['scale','minmax',None],
    'lags':[lags],
}

sgd = {
    'penalty':['l2','l1','elasticnet'],
    'l1_ratio':[0,0.15,0.5,0.85,1],
    'learning_rate':['invscaling','constant','optimal','adaptive'],
    'lags':[lags],
}

svr = {
    'kernel':['linear'],
    'C':[.5,1,2,3],
    'epsilon':[0.01,0.1,0.5],
    'lags':[lags],
}

xgboost = {
    'n_estimators':[150,200,250],
    'scale_pos_weight':[5,10],
    'learning_rate':[0.1,0.2],
    'gamma':[0,3,5],
    'subsample':[0.8,0.9],
    'lags':[lags],
}
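These grids are large. The `elasticnet` grid alone has 20 × 5 × 3 = 300 combinations; the `lags` entry holds a single dict, so it does not change the count. The next cell's `limit_grid_size(10)` keeps 10 of them. The standard-library sketch below expands a grid dictionary into every combination and samples 10. The helper names are hypothetical, and uniform sampling without replacement is an assumption about what `limit_grid_size` does. The grid is named `demo_grid` so that running the sketch does not overwrite the notebook's `elasticnet` grid.

```python
import itertools
import random

def expand_grid(grid):
    """Every combination of a {param: [values]} grid, as a list of dicts."""
    keys = list(grid)
    return [dict(zip(keys, combo)) for combo in itertools.product(*grid.values())]

def limit_grid(combos, n, seed=20):
    """Keep a random subset of n combinations (all of them if n >= len)."""
    if n >= len(combos):
        return combos
    return random.Random(seed).sample(combos, n)

demo_grid = {
    'alpha': [i / 10 for i in range(1, 21)],
    'l1_ratio': [0, 0.25, 0.5, 0.75, 1],
    'normalizer': ['scale', 'minmax', None],
}
combos = expand_grid(demo_grid)
subset = limit_grid(combos, 10)
print(len(combos), len(subset))  # 300 10
```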

[17]:

models = (
    'knn',
    'mlr',
    'elasticnet',
    'sgd',
    'xgboost',
    'lightgbm',
    'mlp',
)

print('beginning models cross validated')
for m in tqdm(models):
    mvf.set_estimator(m)
    mvf.ingest_grid(globals()[m])  # the grid dict defined above with the same name as the model
    mvf.limit_grid_size(10)
    mvf.cross_validate(k=3,dynamic_tuning=fcst_horizon)
    mvf.auto_forecast(call_me=f'{m}_cv_mv')

print('beginning models tuned')
for m in tqdm(models):
    mvf.set_estimator(m)
    mvf.ingest_grid(globals()[m])
    mvf.limit_grid_size(10)
    mvf.tune(dynamic_tuning=fcst_horizon)
    mvf.auto_forecast(call_me=f'{m}_tune_mv')

beginning models cross validated

beginning models tuned
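The two loops differ in how they choose hyperparameters:

- `tune()` scores each candidate on a single validation window, set here to 36 observations with `set_validation_length`.
- `cross_validate(k=3)` scores each candidate over several time-ordered folds.

The sketch below shows one common way to build k expanding-window folds, where each fold trains on everything before its validation block. It uses an arbitrary length of 245 observations. The function is hypothetical, and scalecast's exact fold boundaries may differ.

```python
def expanding_window_folds(n_obs, k):
    """Split range(n_obs) into k time-ordered (train, validation) folds.

    Each validation block follows its training data; training windows grow
    fold by fold, and the last validation block runs to the end of the data.
    """
    fold_size = n_obs // (k + 1)
    folds = []
    for i in range(1, k + 1):
        train_end = fold_size * i
        val_end = train_end + fold_size if i < k else n_obs
        folds.append((range(0, train_end), range(train_end, val_end)))
    return folds

for train, val in expanding_window_folds(n_obs=245, k=3):
    print(f'train 0-{train[-1]}, validate {val[0]}-{val[-1]}')
```

Unlike shuffled k-fold, no fold ever trains on data that comes after its validation block, which matters for series with trends and regime changes like these.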

[18]:

mvf.set_best_model(determine_best_by='LevelTestSetMAPE')
results = mvf.export('model_summaries')
results_sub = results.loc[results['Series'].isin(airline_series)]  # airline series only
results_sub[['Series','ModelNickname','LevelTestSetR2','LevelTestSetMAPE']]

[18]:

|  | Series | ModelNickname | LevelTestSetR2 | LevelTestSetMAPE |

|---|---|---|---|---|

| 0 | Hou-Dom | elasticnet_tune_mv | -0.008851 | 0.092853 |

| 1 | Hou-Dom | knn_cv_mv | -0.122960 | 0.100203 |

| 2 | Hou-Dom | mlr_cv_mv | -4.865199 | 0.209266 |

| 3 | Hou-Dom | elasticnet_cv_mv | -0.046781 | 0.090712 |

| 4 | Hou-Dom | sgd_cv_mv | 0.044149 | 0.086127 |

| 5 | Hou-Dom | xgboost_cv_mv | -1.806345 | 0.130049 |

| 6 | Hou-Dom | lightgbm_cv_mv | -0.020278 | 0.087836 |

| 7 | Hou-Dom | mlp_cv_mv | -0.082069 | 0.087113 |

| 8 | Hou-Dom | knn_tune_mv | -0.731346 | 0.106951 |

| 9 | Hou-Dom | mlr_tune_mv | -4.865199 | 0.209266 |

| 10 | Hou-Dom | sgd_tune_mv | 0.069918 | 0.086680 |

| 11 | Hou-Dom | xgboost_tune_mv | -1.687364 | 0.129505 |

| 12 | Hou-Dom | lightgbm_tune_mv | 0.106759 | 0.086545 |

| 13 | Hou-Dom | mlp_tune_mv | 0.140452 | 0.080000 |

| 14 | IAH-DOM | elasticnet_tune_mv | -2.675066 | 0.130365 |

| 15 | IAH-DOM | knn_cv_mv | -1.521107 | 0.102719 |

| 16 | IAH-DOM | mlr_cv_mv | -0.700337 | 0.065628 |

| 17 | IAH-DOM | elasticnet_cv_mv | -3.282911 | 0.143407 |

| 18 | IAH-DOM | sgd_cv_mv | -3.305075 | 0.144816 |

| 19 | IAH-DOM | xgboost_cv_mv | -1.703854 | 0.112821 |

| 20 | IAH-DOM | lightgbm_cv_mv | -3.602143 | 0.150592 |

| 21 | IAH-DOM | mlp_cv_mv | -3.669990 | 0.150982 |

| 22 | IAH-DOM | knn_tune_mv | -0.765313 | 0.077754 |

| 23 | IAH-DOM | mlr_tune_mv | -0.700337 | 0.065628 |

| 24 | IAH-DOM | sgd_tune_mv | -3.141806 | 0.141990 |

| 25 | IAH-DOM | xgboost_tune_mv | -2.161605 | 0.126577 |

| 26 | IAH-DOM | lightgbm_tune_mv | -2.480593 | 0.125745 |

| 27 | IAH-DOM | mlp_tune_mv | -2.759960 | 0.128641 |

| 28 | IAH-Int | elasticnet_tune_mv | -0.019007 | 0.153717 |

| 29 | IAH-Int | knn_cv_mv | -0.411933 | 0.196223 |

| 30 | IAH-Int | mlr_cv_mv | -2.984908 | 0.283815 |

| 31 | IAH-Int | elasticnet_cv_mv | -0.175874 | 0.148786 |

| 32 | IAH-Int | sgd_cv_mv | -0.637143 | 0.207411 |

| 33 | IAH-Int | xgboost_cv_mv | -1.073559 | 0.225976 |

| 34 | IAH-Int | lightgbm_cv_mv | -0.132948 | 0.156983 |

| 35 | IAH-Int | mlp_cv_mv | -0.302144 | 0.156178 |

| 36 | IAH-Int | knn_tune_mv | -0.689363 | 0.192302 |

| 37 | IAH-Int | mlr_tune_mv | -2.984908 | 0.283815 |

| 38 | IAH-Int | sgd_tune_mv | -0.498934 | 0.200455 |

| 39 | IAH-Int | xgboost_tune_mv | -0.300637 | 0.173677 |

| 40 | IAH-Int | lightgbm_tune_mv | -0.715109 | 0.207723 |

| 41 | IAH-Int | mlp_tune_mv | -0.430382 | 0.193184 |
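The table reports `LevelTestSetMAPE` and `LevelTestSetR2` on the 4-month test set, measured on the series' original (undifferenced) level. Many R² values are negative. That means the forecast did worse on those four points than a flat line at their mean would have. The standard definitions in NumPy:

```python
import numpy as np

def mape(actual, pred):
    """Mean absolute percentage error, as a fraction (like the table)."""
    actual, pred = np.asarray(actual, float), np.asarray(pred, float)
    return float(np.mean(np.abs((actual - pred) / actual)))

def r2(actual, pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    actual, pred = np.asarray(actual, float), np.asarray(pred, float)
    ss_res = np.sum((actual - pred) ** 2)
    ss_tot = np.sum((actual - actual.mean()) ** 2)
    return float(1 - ss_res / ss_tot)

actual = [100, 200, 300, 400]
good = [110, 190, 310, 380]
flat = [260, 260, 260, 260]  # misses the trend entirely
print(round(mape(actual, good), 4), round(r2(actual, good), 3))  # 0.0583 0.986
print(round(r2(actual, flat), 3))  # -0.008: worse than the test-set mean
```

With only four test points, R² is very sensitive to small misses. This is one reason the notebook ranks models by MAPE instead.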

[19]:

models_to_stack = (
    'knn_cv_mv',
    'elasticnet_cv_mv',
    'xgboost_cv_mv',
    'lightgbm_cv_mv',
)

mlp_stack(
    mvf,
    model_nicknames=models_to_stack,
    call_me='stacking_mv',
)
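`mlp_stack` fits a multilayer perceptron on the predictions of the listed models to produce a combined forecast. The sketch below shows the stacking idea with NumPy only. It swaps the MLP for a linear meta-learner: least squares on the base models' predictions plus an intercept. The data are synthetic: one base model is biased and one is noisy. The setup is simplified. Production stacking should fit the meta-learner on out-of-sample base predictions to avoid leakage.

```python
import numpy as np

rng = np.random.default_rng(0)
actual = np.linspace(100, 200, 50)
base_a = actual + 15 + rng.normal(scale=2, size=actual.size)  # biased but precise
base_b = actual + rng.normal(scale=10, size=actual.size)      # unbiased but noisy
P = np.column_stack([np.ones_like(actual), base_a, base_b])   # intercept + base predictions

train, test = slice(0, 40), slice(40, 50)
w, *_ = np.linalg.lstsq(P[train], actual[train], rcond=None)  # meta-learner weights
stacked = P[test] @ w

def mae(a, p):
    return float(np.mean(np.abs(a - p)))

for name, pred in [('base_a', base_a[test]), ('base_b', base_b[test]), ('stacked', stacked)]:
    print(name, round(mae(actual[test], pred), 2))
```

The meta-learner removes base_a's bias through the intercept and leans mostly on the more precise model. That is why the stacked error can beat every input.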

[20]:

mvf.set_best_model(determine_best_by='LevelTestSetMAPE')
results = mvf.export('model_summaries')
results_sub = results.loc[results['Series'].isin(airline_series)]  # airline series only
results_sub[['Series','ModelNickname','LevelTestSetR2','LevelTestSetMAPE']]

[20]:

|  | Series | ModelNickname | LevelTestSetR2 | LevelTestSetMAPE |

|---|---|---|---|---|

| 0 | Hou-Dom | stacking_mv | 0.137636 | 0.062217 |

| 1 | Hou-Dom | knn_cv_mv | -0.122960 | 0.100203 |

| 2 | Hou-Dom | mlr_cv_mv | -4.865199 | 0.209266 |

| 3 | Hou-Dom | elasticnet_cv_mv | -0.046781 | 0.090712 |

| 4 | Hou-Dom | sgd_cv_mv | 0.044149 | 0.086127 |

| 5 | Hou-Dom | xgboost_cv_mv | -1.806345 | 0.130049 |

| 6 | Hou-Dom | lightgbm_cv_mv | -0.020278 | 0.087836 |

| 7 | Hou-Dom | mlp_cv_mv | -0.082069 | 0.087113 |

| 8 | Hou-Dom | knn_tune_mv | -0.731346 | 0.106951 |

| 9 | Hou-Dom | mlr_tune_mv | -4.865199 | 0.209266 |

| 10 | Hou-Dom | elasticnet_tune_mv | -0.008851 | 0.092853 |

| 11 | Hou-Dom | sgd_tune_mv | 0.069918 | 0.086680 |

| 12 | Hou-Dom | xgboost_tune_mv | -1.687364 | 0.129505 |

| 13 | Hou-Dom | lightgbm_tune_mv | 0.106759 | 0.086545 |

| 14 | Hou-Dom | mlp_tune_mv | 0.140452 | 0.080000 |

| 15 | IAH-DOM | stacking_mv | -1.030366 | 0.097983 |

| 16 | IAH-DOM | knn_cv_mv | -1.521107 | 0.102719 |

| 17 | IAH-DOM | mlr_cv_mv | -0.700337 | 0.065628 |

| 18 | IAH-DOM | elasticnet_cv_mv | -3.282911 | 0.143407 |

| 19 | IAH-DOM | sgd_cv_mv | -3.305075 | 0.144816 |

| 20 | IAH-DOM | xgboost_cv_mv | -1.703854 | 0.112821 |

| 21 | IAH-DOM | lightgbm_cv_mv | -3.602143 | 0.150592 |

| 22 | IAH-DOM | mlp_cv_mv | -3.669990 | 0.150982 |

| 23 | IAH-DOM | knn_tune_mv | -0.765313 | 0.077754 |

| 24 | IAH-DOM | mlr_tune_mv | -0.700337 | 0.065628 |

| 25 | IAH-DOM | elasticnet_tune_mv | -2.675066 | 0.130365 |

| 26 | IAH-DOM | sgd_tune_mv | -3.141806 | 0.141990 |

| 27 | IAH-DOM | xgboost_tune_mv | -2.161605 | 0.126577 |

| 28 | IAH-DOM | lightgbm_tune_mv | -2.480593 | 0.125745 |

| 29 | IAH-DOM | mlp_tune_mv | -2.759960 | 0.128641 |

| 30 | IAH-Int | stacking_mv | 0.725500 | 0.062671 |

| 31 | IAH-Int | knn_cv_mv | -0.411933 | 0.196223 |

| 32 | IAH-Int | mlr_cv_mv | -2.984908 | 0.283815 |

| 33 | IAH-Int | elasticnet_cv_mv | -0.175874 | 0.148786 |

| 34 | IAH-Int | sgd_cv_mv | -0.637143 | 0.207411 |

| 35 | IAH-Int | xgboost_cv_mv | -1.073559 | 0.225976 |

| 36 | IAH-Int | lightgbm_cv_mv | -0.132948 | 0.156983 |

| 37 | IAH-Int | mlp_cv_mv | -0.302144 | 0.156178 |

| 38 | IAH-Int | knn_tune_mv | -0.689363 | 0.192302 |

| 39 | IAH-Int | mlr_tune_mv | -2.984908 | 0.283815 |

| 40 | IAH-Int | elasticnet_tune_mv | -0.019007 | 0.153717 |

| 41 | IAH-Int | sgd_tune_mv | -0.498934 | 0.200455 |

| 42 | IAH-Int | xgboost_tune_mv | -0.300637 | 0.173677 |

| 43 | IAH-Int | lightgbm_tune_mv | -0.715109 | 0.207723 |

| 44 | IAH-Int | mlp_tune_mv | -0.430382 | 0.193184 |

Univariate Forecasting

[21]:

# split the MVForecaster back into one Forecaster per series; keep the three airline series
f1, f2, f3 = break_mv_forecaster(mvf)[:3]
fdict[airline_series[0]] = f1
fdict[airline_series[1]] = f2
fdict[airline_series[2]] = f3

[22]:

f1

[22]:

Forecaster(

DateStartActuals=2001-02-01T00:00:00.000000000

DateEndActuals=2021-09-01T00:00:00.000000000

Freq=MS

N_actuals=248

ForecastLength=4

Xvars=['Anomaly_2020-04-01', 'cp2', 'monthsin', 'monthcos']

Differenced=1

TestLength=4

ValidationLength=36

ValidationMetric=rmse

ForecastsEvaluated=['knn_cv_mv', 'mlr_cv_mv', 'elasticnet_cv_mv', 'sgd_cv_mv', 'xgboost_cv_mv', 'lightgbm_cv_mv', 'mlp_cv_mv', 'knn_tune_mv', 'mlr_tune_mv', 'elasticnet_tune_mv', 'sgd_tune_mv', 'xgboost_tune_mv', 'lightgbm_tune_mv', 'mlp_tune_mv', 'stacking_mv']

CILevel=0.95

BootstrapSamples=100

CurrentEstimator=None

)

[23]:

models = (
    'knn',
    'mlr',
    'elasticnet',
    'sgd',
    'xgboost',
    'lightgbm',
    'mlp',
    'hwes',
    'arima',
    'prophet',
    'silverkite',
    'theta',
)

for l, f in fdict.items():
    print(f'processing {l}')
    # AR1 plus seasonal AR terms spaced 6 and 12 months apart
    f.add_ar_terms(1)
    f.add_AR_terms((3,6))
    f.add_AR_terms((3,12))
    # pmdarima-based auto ARIMA (saved as 'auto_arima'), then rerun its orders with all Xvars
    auto_arima(
        f,
        m=12,
        error_action='ignore',
    )
    f.manual_forecast(
        order=f.auto_arima_params['order'],
        seasonal_order=f.auto_arima_params['seasonal_order'],
        Xvars='all',
        call_me='auto_arima_anom',
    )
    # every model twice: hyperparameters chosen by 3-fold CV, then by a single validation set
    f.tune_test_forecast(
        models,
        limit_grid_size=10,
        cross_validate=True,
        k=3,
        dynamic_tuning=fcst_horizon,
        suffix='_cv_uv',
    )
    f.tune_test_forecast(
        models,
        limit_grid_size=10,
        cross_validate=False,
        dynamic_tuning=fcst_horizon,
        suffix='_tune_uv',
    )

processing Hou-Dom

processing IAH-DOM

processing IAH-Int
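The cell above combines ordinary and seasonal autoregressive terms:

- `add_ar_terms(1)` adds AR1.
- `add_AR_terms((P, m))` adds P seasonal lags spaced m periods apart. So (3, 6) requests lags 6, 12, and 18, and (3, 12) requests lags 12, 24, and 36.

Lag 12 is therefore requested twice. The tiny helper below lists the resulting lag set. It is hypothetical, not part of scalecast. It treats the repeated lag as one column by using a set; how scalecast handles the duplicate is not shown in this notebook.

```python
def seasonal_lags(P, m):
    """Lags requested by a seasonal AR spec of P terms spaced m apart: m, 2m, ..., Pm."""
    return [m * i for i in range(1, P + 1)]

uv_lags = sorted({1} | set(seasonal_lags(3, 6)) | set(seasonal_lags(3, 12)))
print(uv_lags)  # [1, 6, 12, 18, 24, 36]
```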

[24]:

results = export_model_summaries(
    fdict,
    determine_best_by='LevelTestSetMAPE',
)
results[['Series','ModelNickname','LevelTestSetMAPE']].sort_values(
    ['Series','LevelTestSetMAPE']
).head(25)

[24]:

|  | Series | ModelNickname | LevelTestSetMAPE |

|---|---|---|---|

| 0 | Hou-Dom | prophet_cv_uv | 0.024967 |

| 1 | Hou-Dom | arima_tune_uv | 0.031463 |

| 2 | Hou-Dom | arima_cv_uv | 0.031463 |

| 3 | Hou-Dom | hwes_tune_uv | 0.040938 |

| 4 | Hou-Dom | hwes_cv_uv | 0.040938 |

| 5 | Hou-Dom | auto_arima_anom | 0.041796 |

| 6 | Hou-Dom | prophet_tune_uv | 0.046722 |

| 7 | Hou-Dom | sgd_cv_uv | 0.054584 |

| 8 | Hou-Dom | sgd_tune_uv | 0.056646 |

| 9 | Hou-Dom | stacking_mv | 0.062217 |

| 10 | Hou-Dom | auto_arima | 0.071545 |

| 11 | Hou-Dom | mlr_tune_uv | 0.072219 |

| 12 | Hou-Dom | mlr_cv_uv | 0.072219 |

| 13 | Hou-Dom | elasticnet_cv_uv | 0.072386 |

| 14 | Hou-Dom | elasticnet_tune_uv | 0.073144 |

| 15 | Hou-Dom | mlp_cv_uv | 0.075766 |

| 16 | Hou-Dom | mlp_tune_uv | 0.079230 |

| 17 | Hou-Dom | mlp_tune_mv | 0.080000 |

| 18 | Hou-Dom | lightgbm_cv_uv | 0.084266 |

| 19 | Hou-Dom | sgd_cv_mv | 0.086127 |

| 20 | Hou-Dom | lightgbm_tune_mv | 0.086545 |

| 21 | Hou-Dom | sgd_tune_mv | 0.086680 |

| 22 | Hou-Dom | mlp_cv_mv | 0.087113 |

| 23 | Hou-Dom | lightgbm_tune_uv | 0.087297 |

| 24 | Hou-Dom | lightgbm_cv_mv | 0.087836 |

[25]:

models_to_stack = (
    'knn_cv_uv',
    'sgd_cv_uv',
    'elasticnet_cv_uv',
    'xgboost_cv_uv',
)

for l, f in fdict.items():
    mlp_stack(
        f,
        model_nicknames=models_to_stack,
        call_me='stacking_uv'
    )

[26]:

for l, f in fdict.items():
    # trains 120 LSTM models in total to build probabilistic forecast intervals
    f.set_estimator('rnn')
    f.proba_forecast(
        lags=48,
        layers_struct=[('LSTM',{'units':100,'dropout':0})]*2 + [('Dense',{'units':10})]*2,
        epochs=8,
        random_seed=42,
        validation_split=0.2,
        call_me='lstm',
    )
    # combine the evaluated models: a simple average and an average weighted by LevelTestSetMAPE
    f.set_estimator('combo')
    f.manual_forecast(how='simple',call_me='avg')
    f.manual_forecast(how='weighted',determine_best_by='LevelTestSetMAPE',call_me='weighted')

Epoch 1/8

5/5 [==============================] - 4s 220ms/step - loss: 0.4620 - val_loss: 0.2296

Epoch 2/8

5/5 [==============================] - 0s 50ms/step - loss: 0.1978 - val_loss: 0.0809

Epoch 3/8

5/5 [==============================] - 0s 50ms/step - loss: 0.1269 - val_loss: 0.0839

Epoch 4/8

5/5 [==============================] - 0s 53ms/step - loss: 0.1066 - val_loss: 0.0730

Epoch 5/8

5/5 [==============================] - 0s 55ms/step - loss: 0.1078 - val_loss: 0.0715

Epoch 6/8

5/5 [==============================] - 0s 57ms/step - loss: 0.1027 - val_loss: 0.0679

Epoch 7/8

5/5 [==============================] - 0s 57ms/step - loss: 0.1011 - val_loss: 0.0684

Epoch 8/8

5/5 [==============================] - 0s 57ms/step - loss: 0.1015 - val_loss: 0.0667

Epoch 1/8

5/5 [==============================] - 3s 216ms/step - loss: 0.4601 - val_loss: 0.3736

Epoch 2/8

5/5 [==============================] - 0s 53ms/step - loss: 0.3318 - val_loss: 0.2527

Epoch 3/8

5/5 [==============================] - 0s 53ms/step - loss: 0.2008 - val_loss: 0.1088

Epoch 4/8

5/5 [==============================] - 0s 57ms/step - loss: 0.1475 - val_loss: 0.0784

Epoch 5/8

5/5 [==============================] - 0s 58ms/step - loss: 0.1171 - val_loss: 0.0935

Epoch 6/8

5/5 [==============================] - 0s 58ms/step - loss: 0.1159 - val_loss: 0.0686

Epoch 7/8

5/5 [==============================] - 0s 59ms/step - loss: 0.1070 - val_loss: 0.0794

Epoch 8/8

5/5 [==============================] - 0s 58ms/step - loss: 0.1058 - val_loss: 0.0712

Epoch 1/8

5/5 [==============================] - 4s 212ms/step - loss: 0.4531 - val_loss: 0.3494

Epoch 2/8

5/5 [==============================] - 0s 56ms/step - loss: 0.3395 - val_loss: 0.2977

Epoch 3/8

5/5 [==============================] - 0s 62ms/step - loss: 0.2786 - val_loss: 0.2221

Epoch 4/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1931 - val_loss: 0.1028

Epoch 5/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1221 - val_loss: 0.1066

Epoch 6/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1128 - val_loss: 0.0671

Epoch 7/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1043 - val_loss: 0.0803

Epoch 8/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1072 - val_loss: 0.0695

Epoch 1/8

5/5 [==============================] - 3s 208ms/step - loss: 0.4293 - val_loss: 0.2660

Epoch 2/8

5/5 [==============================] - 0s 53ms/step - loss: 0.2586 - val_loss: 0.2127

Epoch 3/8

5/5 [==============================] - 0s 59ms/step - loss: 0.2098 - val_loss: 0.1606

Epoch 4/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1548 - val_loss: 0.0804

Epoch 5/8

5/5 [==============================] - 0s 60ms/step - loss: 0.1092 - val_loss: 0.0948

Epoch 6/8

5/5 [==============================] - 0s 62ms/step - loss: 0.1145 - val_loss: 0.0755

Epoch 7/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1030 - val_loss: 0.0713

Epoch 8/8

5/5 [==============================] - 0s 60ms/step - loss: 0.1055 - val_loss: 0.0713

Epoch 1/8

5/5 [==============================] - 4s 209ms/step - loss: 0.4792 - val_loss: 0.2554

Epoch 2/8

5/5 [==============================] - 0s 61ms/step - loss: 0.2547 - val_loss: 0.2213

Epoch 3/8

5/5 [==============================] - 0s 62ms/step - loss: 0.2354 - val_loss: 0.1948

Epoch 4/8

5/5 [==============================] - 0s 63ms/step - loss: 0.2018 - val_loss: 0.1737

Epoch 5/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1835 - val_loss: 0.1632

Epoch 6/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1655 - val_loss: 0.1375

Epoch 7/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1479 - val_loss: 0.1149

Epoch 8/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1307 - val_loss: 0.0922

Epoch 1/8

5/5 [==============================] - 3s 208ms/step - loss: 0.4198 - val_loss: 0.1965

Epoch 2/8

5/5 [==============================] - 0s 58ms/step - loss: 0.1934 - val_loss: 0.1305

Epoch 3/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1269 - val_loss: 0.1067

Epoch 4/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1175 - val_loss: 0.0894

Epoch 5/8

5/5 [==============================] - 0s 86ms/step - loss: 0.1071 - val_loss: 0.0720

Epoch 6/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1016 - val_loss: 0.0698

Epoch 7/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1033 - val_loss: 0.0673

Epoch 8/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1021 - val_loss: 0.0675

Epoch 1/8

5/5 [==============================] - 4s 212ms/step - loss: 0.3767 - val_loss: 0.2195

Epoch 2/8

5/5 [==============================] - 0s 59ms/step - loss: 0.1603 - val_loss: 0.1068

Epoch 3/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1155 - val_loss: 0.0934

Epoch 4/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1072 - val_loss: 0.0758

Epoch 5/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1023 - val_loss: 0.0729

Epoch 6/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1031 - val_loss: 0.0683

Epoch 7/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1002 - val_loss: 0.0644

Epoch 8/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1005 - val_loss: 0.0648

Epoch 1/8

5/5 [==============================] - 3s 219ms/step - loss: 0.4492 - val_loss: 0.3560

Epoch 2/8

5/5 [==============================] - 0s 60ms/step - loss: 0.3102 - val_loss: 0.2044

Epoch 3/8

5/5 [==============================] - 0s 71ms/step - loss: 0.2073 - val_loss: 0.1401

Epoch 4/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1334 - val_loss: 0.0936

Epoch 5/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1162 - val_loss: 0.0925

Epoch 6/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1104 - val_loss: 0.0837

Epoch 7/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1038 - val_loss: 0.0777

Epoch 8/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1062 - val_loss: 0.0714

Epoch 1/8

5/5 [==============================] - 4s 211ms/step - loss: 0.4593 - val_loss: 0.2216

Epoch 2/8

5/5 [==============================] - 0s 60ms/step - loss: 0.1930 - val_loss: 0.0755

Epoch 3/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1168 - val_loss: 0.0891

Epoch 4/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1054 - val_loss: 0.0771

Epoch 5/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1080 - val_loss: 0.0758

Epoch 6/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1047 - val_loss: 0.0671

Epoch 7/8

5/5 [==============================] - 0s 70ms/step - loss: 0.0987 - val_loss: 0.0685

Epoch 8/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1010 - val_loss: 0.0668

Epoch 1/8

5/5 [==============================] - 3s 211ms/step - loss: 0.3480 - val_loss: 0.1881

Epoch 2/8

5/5 [==============================] - 0s 55ms/step - loss: 0.1496 - val_loss: 0.1327

Epoch 3/8

5/5 [==============================] - 0s 58ms/step - loss: 0.1256 - val_loss: 0.0812

Epoch 4/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1118 - val_loss: 0.0679

Epoch 5/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1041 - val_loss: 0.0699

Epoch 6/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1041 - val_loss: 0.0719

Epoch 7/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1011 - val_loss: 0.0718

Epoch 8/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1016 - val_loss: 0.0662

Epoch 1/8

5/5 [==============================] - 4s 278ms/step - loss: 0.3927 - val_loss: 0.1465

Epoch 2/8

5/5 [==============================] - 0s 62ms/step - loss: 0.1637 - val_loss: 0.1382

Epoch 3/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1637 - val_loss: 0.0927

Epoch 4/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1179 - val_loss: 0.0952

Epoch 5/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1103 - val_loss: 0.0755

Epoch 6/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1057 - val_loss: 0.0721

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1038 - val_loss: 0.0688

Epoch 8/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1020 - val_loss: 0.0706

Epoch 1/8

5/5 [==============================] - 4s 254ms/step - loss: 0.3792 - val_loss: 0.2446

Epoch 2/8

5/5 [==============================] - 0s 69ms/step - loss: 0.2609 - val_loss: 0.2121

Epoch 3/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1907 - val_loss: 0.1374

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1291 - val_loss: 0.0814

Epoch 5/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1152 - val_loss: 0.0888

Epoch 6/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1110 - val_loss: 0.0718

Epoch 7/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1024 - val_loss: 0.0704

Epoch 8/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1031 - val_loss: 0.0699

Epoch 1/8

5/5 [==============================] - 4s 263ms/step - loss: 0.4634 - val_loss: 0.1887

Epoch 2/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1608 - val_loss: 0.0798

Epoch 3/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1264 - val_loss: 0.0769

Epoch 4/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1073 - val_loss: 0.0790

Epoch 5/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1057 - val_loss: 0.0830

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1065 - val_loss: 0.0696

Epoch 7/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1033 - val_loss: 0.0670

Epoch 8/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1027 - val_loss: 0.0732

Epoch 1/8

5/5 [==============================] - 5s 364ms/step - loss: 0.4697 - val_loss: 0.3228

Epoch 2/8

5/5 [==============================] - 0s 100ms/step - loss: 0.2379 - val_loss: 0.1059

Epoch 3/8

5/5 [==============================] - 0s 85ms/step - loss: 0.1345 - val_loss: 0.0838

Epoch 4/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1206 - val_loss: 0.0798

Epoch 5/8

5/5 [==============================] - 0s 89ms/step - loss: 0.1090 - val_loss: 0.0798

Epoch 6/8

5/5 [==============================] - 0s 88ms/step - loss: 0.1082 - val_loss: 0.0723

Epoch 7/8

5/5 [==============================] - 1s 146ms/step - loss: 0.1056 - val_loss: 0.0726

Epoch 8/8

5/5 [==============================] - 0s 100ms/step - loss: 0.1040 - val_loss: 0.0767

Epoch 1/8

5/5 [==============================] - 4s 223ms/step - loss: 0.3879 - val_loss: 0.2142

Epoch 2/8

5/5 [==============================] - 0s 62ms/step - loss: 0.1915 - val_loss: 0.1533

Epoch 3/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1613 - val_loss: 0.1085

Epoch 4/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1361 - val_loss: 0.0919

Epoch 5/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1199 - val_loss: 0.0895

Epoch 6/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1102 - val_loss: 0.0791

Epoch 7/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1039 - val_loss: 0.0719

Epoch 8/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1019 - val_loss: 0.0672

Epoch 1/8

5/5 [==============================] - 5s 252ms/step - loss: 0.4495 - val_loss: 0.2936

Epoch 2/8

5/5 [==============================] - 0s 71ms/step - loss: 0.2243 - val_loss: 0.0909

Epoch 3/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1233 - val_loss: 0.1121

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1221 - val_loss: 0.0722

Epoch 5/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1044 - val_loss: 0.0728

Epoch 6/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1050 - val_loss: 0.0705

Epoch 7/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1017 - val_loss: 0.0683

Epoch 8/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1035 - val_loss: 0.0710

Epoch 1/8

5/5 [==============================] - 3s 214ms/step - loss: 0.3382 - val_loss: 0.2025

Epoch 2/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1671 - val_loss: 0.1272

Epoch 3/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1206 - val_loss: 0.0859

Epoch 4/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1111 - val_loss: 0.0765

Epoch 5/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1075 - val_loss: 0.0655

Epoch 6/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1050 - val_loss: 0.0676

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1006 - val_loss: 0.0729

Epoch 8/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1005 - val_loss: 0.0657

Epoch 1/8

5/5 [==============================] - 3s 211ms/step - loss: 0.3966 - val_loss: 0.2425

Epoch 2/8

5/5 [==============================] - 0s 59ms/step - loss: 0.1635 - val_loss: 0.1310

Epoch 3/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1218 - val_loss: 0.0867

Epoch 4/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1174 - val_loss: 0.0791

Epoch 5/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1061 - val_loss: 0.0781

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1054 - val_loss: 0.0676

Epoch 7/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1003 - val_loss: 0.0674

Epoch 8/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1002 - val_loss: 0.0673

Epoch 1/8

5/5 [==============================] - 5s 273ms/step - loss: 0.4536 - val_loss: 0.2352

Epoch 2/8

5/5 [==============================] - 0s 60ms/step - loss: 0.2195 - val_loss: 0.0839

Epoch 3/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1171 - val_loss: 0.0999

Epoch 4/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1121 - val_loss: 0.0683

Epoch 5/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1038 - val_loss: 0.0733

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1033 - val_loss: 0.0707

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1004 - val_loss: 0.0659

Epoch 8/8

5/5 [==============================] - 0s 72ms/step - loss: 0.0994 - val_loss: 0.0654

Epoch 1/8

5/5 [==============================] - 5s 226ms/step - loss: 0.4240 - val_loss: 0.2890

Epoch 2/8

5/5 [==============================] - 0s 59ms/step - loss: 0.2342 - val_loss: 0.1640

Epoch 3/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1377 - val_loss: 0.0749

Epoch 4/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1181 - val_loss: 0.0889

Epoch 5/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1039 - val_loss: 0.0670

Epoch 6/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1030 - val_loss: 0.0728

Epoch 7/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1036 - val_loss: 0.0721

Epoch 8/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1012 - val_loss: 0.0663

Epoch 1/8

5/5 [==============================] - 4s 244ms/step - loss: 0.4628 - val_loss: 0.3032

Epoch 2/8

5/5 [==============================] - 0s 60ms/step - loss: 0.2330 - val_loss: 0.1009

Epoch 3/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1380 - val_loss: 0.1133

Epoch 4/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1185 - val_loss: 0.0787

Epoch 5/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1099 - val_loss: 0.0818

Epoch 6/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1080 - val_loss: 0.0658

Epoch 7/8

5/5 [==============================] - 0s 64ms/step - loss: 0.0994 - val_loss: 0.0718

Epoch 8/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1015 - val_loss: 0.0680

Epoch 1/8

5/5 [==============================] - 3s 215ms/step - loss: 0.4780 - val_loss: 0.3311

Epoch 2/8

5/5 [==============================] - 0s 63ms/step - loss: 0.2221 - val_loss: 0.1361

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1313 - val_loss: 0.1162

Epoch 4/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1200 - val_loss: 0.0776

Epoch 5/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1116 - val_loss: 0.0669

Epoch 6/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1046 - val_loss: 0.0743

Epoch 7/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1020 - val_loss: 0.0691

Epoch 8/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1035 - val_loss: 0.0699

Epoch 1/8

5/5 [==============================] - 4s 253ms/step - loss: 0.4216 - val_loss: 0.1989

Epoch 2/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1909 - val_loss: 0.1219

Epoch 3/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1425 - val_loss: 0.0693

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1113 - val_loss: 0.0675

Epoch 5/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1053 - val_loss: 0.0670

Epoch 6/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1019 - val_loss: 0.0664

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1010 - val_loss: 0.0706

Epoch 8/8

5/5 [==============================] - 0s 73ms/step - loss: 0.0997 - val_loss: 0.0702

Epoch 1/8

5/5 [==============================] - 3s 218ms/step - loss: 0.4416 - val_loss: 0.2831

Epoch 2/8

5/5 [==============================] - 0s 65ms/step - loss: 0.2329 - val_loss: 0.1571

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1408 - val_loss: 0.1208

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1209 - val_loss: 0.0977

Epoch 5/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1094 - val_loss: 0.0827

Epoch 6/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1088 - val_loss: 0.0733

Epoch 7/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1029 - val_loss: 0.0725

Epoch 8/8

5/5 [==============================] - 0s 81ms/step - loss: 0.1052 - val_loss: 0.0700

Epoch 1/8

5/5 [==============================] - 4s 238ms/step - loss: 0.4120 - val_loss: 0.2061

Epoch 2/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1739 - val_loss: 0.1037

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1385 - val_loss: 0.1082

Epoch 4/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1111 - val_loss: 0.0834

Epoch 5/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1076 - val_loss: 0.0746

Epoch 6/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1039 - val_loss: 0.0659

Epoch 7/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1000 - val_loss: 0.0690

Epoch 8/8

5/5 [==============================] - 0s 72ms/step - loss: 0.0999 - val_loss: 0.0656

Epoch 1/8

5/5 [==============================] - 4s 236ms/step - loss: 0.3936 - val_loss: 0.2807

Epoch 2/8

5/5 [==============================] - 0s 60ms/step - loss: 0.2506 - val_loss: 0.1960

Epoch 3/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1686 - val_loss: 0.0824

Epoch 4/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1244 - val_loss: 0.0909

Epoch 5/8

5/5 [==============================] - 0s 84ms/step - loss: 0.1101 - val_loss: 0.0748

Epoch 6/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1070 - val_loss: 0.0713

Epoch 7/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1025 - val_loss: 0.0664

Epoch 8/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1012 - val_loss: 0.0674

Epoch 1/8

5/5 [==============================] - 4s 236ms/step - loss: 0.4210 - val_loss: 0.2066

Epoch 2/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1626 - val_loss: 0.1242

Epoch 3/8

5/5 [==============================] - 0s 81ms/step - loss: 0.1269 - val_loss: 0.0778

Epoch 4/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1042 - val_loss: 0.0751

Epoch 5/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1032 - val_loss: 0.0694

Epoch 6/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1003 - val_loss: 0.0681

Epoch 7/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1008 - val_loss: 0.0663

Epoch 8/8

5/5 [==============================] - 0s 79ms/step - loss: 0.0997 - val_loss: 0.0655

Epoch 1/8

5/5 [==============================] - 4s 228ms/step - loss: 0.4332 - val_loss: 0.2339

Epoch 2/8

5/5 [==============================] - 0s 59ms/step - loss: 0.1974 - val_loss: 0.1167

Epoch 3/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1404 - val_loss: 0.1110

Epoch 4/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1149 - val_loss: 0.1094

Epoch 5/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1116 - val_loss: 0.0835

Epoch 6/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1125 - val_loss: 0.0689

Epoch 7/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1029 - val_loss: 0.0793

Epoch 8/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1042 - val_loss: 0.0726

Epoch 1/8

5/5 [==============================] - 3s 207ms/step - loss: 0.4108 - val_loss: 0.2654

Epoch 2/8

5/5 [==============================] - 0s 59ms/step - loss: 0.2124 - val_loss: 0.1372

Epoch 3/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1366 - val_loss: 0.0673

Epoch 4/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1074 - val_loss: 0.0849

Epoch 5/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1105 - val_loss: 0.0704

Epoch 6/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1043 - val_loss: 0.0697

Epoch 7/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1033 - val_loss: 0.0698

Epoch 8/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1030 - val_loss: 0.0681

Epoch 1/8

5/5 [==============================] - 4s 276ms/step - loss: 0.4871 - val_loss: 0.2833

Epoch 2/8

5/5 [==============================] - 0s 74ms/step - loss: 0.2129 - val_loss: 0.0989

Epoch 3/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1284 - val_loss: 0.0944

Epoch 4/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1180 - val_loss: 0.0703

Epoch 5/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1036 - val_loss: 0.0751

Epoch 6/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1037 - val_loss: 0.0702

Epoch 7/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1005 - val_loss: 0.0693

Epoch 8/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1014 - val_loss: 0.0664

Epoch 1/8

5/5 [==============================] - 4s 218ms/step - loss: 0.4543 - val_loss: 0.3302

Epoch 2/8

5/5 [==============================] - 0s 62ms/step - loss: 0.2753 - val_loss: 0.1645

Epoch 3/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1374 - val_loss: 0.1095

Epoch 4/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1161 - val_loss: 0.0818

Epoch 5/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1076 - val_loss: 0.0694

Epoch 6/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1018 - val_loss: 0.0734

Epoch 7/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1014 - val_loss: 0.0679

Epoch 8/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1014 - val_loss: 0.0704

Epoch 1/8

5/5 [==============================] - 4s 253ms/step - loss: 0.4470 - val_loss: 0.2336

Epoch 2/8

5/5 [==============================] - 0s 75ms/step - loss: 0.2225 - val_loss: 0.1406

Epoch 3/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1471 - val_loss: 0.0668

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1129 - val_loss: 0.0906

Epoch 5/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1185 - val_loss: 0.0766

Epoch 6/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1116 - val_loss: 0.0668

Epoch 7/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1067 - val_loss: 0.0732

Epoch 8/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1045 - val_loss: 0.0728

Epoch 1/8

5/5 [==============================] - 5s 345ms/step - loss: 0.3600 - val_loss: 0.1618

Epoch 2/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1455 - val_loss: 0.0905

Epoch 3/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1219 - val_loss: 0.0847

Epoch 4/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1056 - val_loss: 0.0826

Epoch 5/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1071 - val_loss: 0.0687

Epoch 6/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1035 - val_loss: 0.0654

Epoch 7/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1008 - val_loss: 0.0682

Epoch 8/8

5/5 [==============================] - 0s 83ms/step - loss: 0.1010 - val_loss: 0.0656

Epoch 1/8

5/5 [==============================] - 4s 227ms/step - loss: 0.5048 - val_loss: 0.3985

Epoch 2/8

5/5 [==============================] - 0s 67ms/step - loss: 0.3078 - val_loss: 0.2169

Epoch 3/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1968 - val_loss: 0.1200

Epoch 4/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1295 - val_loss: 0.1103

Epoch 5/8

5/5 [==============================] - 0s 81ms/step - loss: 0.1228 - val_loss: 0.0818

Epoch 6/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1097 - val_loss: 0.0803

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1051 - val_loss: 0.0740

Epoch 8/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1092 - val_loss: 0.0728

Epoch 1/8

5/5 [==============================] - 4s 235ms/step - loss: 0.4198 - val_loss: 0.2489

Epoch 2/8

5/5 [==============================] - 0s 68ms/step - loss: 0.2026 - val_loss: 0.1621

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1482 - val_loss: 0.1045

Epoch 4/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1201 - val_loss: 0.0866

Epoch 5/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1117 - val_loss: 0.0732

Epoch 6/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1109 - val_loss: 0.0663

Epoch 7/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1006 - val_loss: 0.0688

Epoch 8/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1002 - val_loss: 0.0659

Epoch 1/8

5/5 [==============================] - 4s 218ms/step - loss: 0.4322 - val_loss: 0.2733

Epoch 2/8

5/5 [==============================] - 0s 71ms/step - loss: 0.2340 - val_loss: 0.1413

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1437 - val_loss: 0.0971

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1162 - val_loss: 0.0941

Epoch 5/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1137 - val_loss: 0.0844

Epoch 6/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1042 - val_loss: 0.0739

Epoch 7/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1054 - val_loss: 0.0710

Epoch 8/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1032 - val_loss: 0.0656

Epoch 1/8

5/5 [==============================] - 4s 283ms/step - loss: 0.4392 - val_loss: 0.1988

Epoch 2/8

5/5 [==============================] - 0s 61ms/step - loss: 0.1863 - val_loss: 0.1257

Epoch 3/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1447 - val_loss: 0.0869

Epoch 4/8

5/5 [==============================] - 0s 65ms/step - loss: 0.1090 - val_loss: 0.0933

Epoch 5/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1161 - val_loss: 0.0720

Epoch 6/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1033 - val_loss: 0.0663

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1015 - val_loss: 0.0674

Epoch 8/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1003 - val_loss: 0.0641

Epoch 1/8

5/5 [==============================] - 3s 211ms/step - loss: 0.4296 - val_loss: 0.2323

Epoch 2/8

5/5 [==============================] - 0s 60ms/step - loss: 0.1646 - val_loss: 0.1102

Epoch 3/8

5/5 [==============================] - 0s 63ms/step - loss: 0.1151 - val_loss: 0.0960

Epoch 4/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1092 - val_loss: 0.0745

Epoch 5/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1056 - val_loss: 0.0773

Epoch 6/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1055 - val_loss: 0.0713

Epoch 7/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1032 - val_loss: 0.0714

Epoch 8/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1014 - val_loss: 0.0706

Epoch 1/8

5/5 [==============================] - 4s 270ms/step - loss: 0.4104 - val_loss: 0.1197

Epoch 2/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1379 - val_loss: 0.0930

Epoch 3/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1173 - val_loss: 0.0695

Epoch 4/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1065 - val_loss: 0.0669

Epoch 5/8

5/5 [==============================] - 0s 87ms/step - loss: 0.1066 - val_loss: 0.0765

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1069 - val_loss: 0.0753

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1034 - val_loss: 0.0696

Epoch 8/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1003 - val_loss: 0.0678

Epoch 1/8

5/5 [==============================] - 4s 233ms/step - loss: 0.3521 - val_loss: 0.1905

Epoch 2/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1519 - val_loss: 0.1034

Epoch 3/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1183 - val_loss: 0.0824

Epoch 4/8

5/5 [==============================] - 0s 85ms/step - loss: 0.1071 - val_loss: 0.0845

Epoch 5/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1071 - val_loss: 0.0708

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1071 - val_loss: 0.0703

Epoch 7/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1056 - val_loss: 0.0693

Epoch 8/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1047 - val_loss: 0.0704

Epoch 1/8

5/5 [==============================] - 5s 292ms/step - loss: 0.5010 - val_loss: 0.3337

Epoch 2/8

5/5 [==============================] - 0s 68ms/step - loss: 0.2583 - val_loss: 0.1420

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1443 - val_loss: 0.1009

Epoch 4/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1182 - val_loss: 0.1119

Epoch 5/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1146 - val_loss: 0.0859

Epoch 6/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1122 - val_loss: 0.0973

Epoch 7/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1080 - val_loss: 0.0878

Epoch 8/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1078 - val_loss: 0.0931

Epoch 1/8

5/5 [==============================] - 5s 320ms/step - loss: 0.4257 - val_loss: 0.2465

Epoch 2/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1725 - val_loss: 0.1440

Epoch 3/8

5/5 [==============================] - 0s 94ms/step - loss: 0.1413 - val_loss: 0.1158

Epoch 4/8

5/5 [==============================] - 0s 89ms/step - loss: 0.1138 - val_loss: 0.1017

Epoch 5/8

5/5 [==============================] - 1s 108ms/step - loss: 0.1163 - val_loss: 0.0972

Epoch 6/8

5/5 [==============================] - 1s 169ms/step - loss: 0.1077 - val_loss: 0.0872

Epoch 7/8

5/5 [==============================] - 1s 109ms/step - loss: 0.1090 - val_loss: 0.0923

Epoch 8/8

5/5 [==============================] - 1s 106ms/step - loss: 0.1093 - val_loss: 0.0898

Epoch 1/8

5/5 [==============================] - 4s 229ms/step - loss: 0.4553 - val_loss: 0.1722

Epoch 2/8

5/5 [==============================] - 0s 62ms/step - loss: 0.1758 - val_loss: 0.1273

Epoch 3/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1420 - val_loss: 0.0989

Epoch 4/8

5/5 [==============================] - 1s 117ms/step - loss: 0.1249 - val_loss: 0.1008

Epoch 5/8

5/5 [==============================] - 1s 188ms/step - loss: 0.1110 - val_loss: 0.1013

Epoch 6/8

5/5 [==============================] - 0s 92ms/step - loss: 0.1116 - val_loss: 0.0906

Epoch 7/8

5/5 [==============================] - 0s 91ms/step - loss: 0.1110 - val_loss: 0.0879

Epoch 8/8

5/5 [==============================] - 0s 90ms/step - loss: 0.1095 - val_loss: 0.0937

Epoch 1/8

5/5 [==============================] - 3s 217ms/step - loss: 0.4561 - val_loss: 0.2289

Epoch 2/8

5/5 [==============================] - 0s 74ms/step - loss: 0.2222 - val_loss: 0.1831

Epoch 3/8

5/5 [==============================] - 0s 86ms/step - loss: 0.1762 - val_loss: 0.1572

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1512 - val_loss: 0.1140

Epoch 5/8

5/5 [==============================] - 0s 84ms/step - loss: 0.1244 - val_loss: 0.0985

Epoch 6/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1089 - val_loss: 0.0924

Epoch 7/8

5/5 [==============================] - 0s 84ms/step - loss: 0.1091 - val_loss: 0.0890

Epoch 8/8

5/5 [==============================] - 0s 107ms/step - loss: 0.1119 - val_loss: 0.0891

Epoch 1/8

5/5 [==============================] - 4s 220ms/step - loss: 0.4510 - val_loss: 0.2694

Epoch 2/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1826 - val_loss: 0.1577

Epoch 3/8

5/5 [==============================] - 0s 66ms/step - loss: 0.1341 - val_loss: 0.0952

Epoch 4/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1209 - val_loss: 0.1013

Epoch 5/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1107 - val_loss: 0.0908

Epoch 6/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1085 - val_loss: 0.0910

Epoch 7/8

5/5 [==============================] - 0s 64ms/step - loss: 0.1070 - val_loss: 0.0860

Epoch 8/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1061 - val_loss: 0.0876

Epoch 1/8

5/5 [==============================] - 3s 222ms/step - loss: 0.4646 - val_loss: 0.2459

Epoch 2/8

5/5 [==============================] - 0s 62ms/step - loss: 0.1769 - val_loss: 0.1648

Epoch 3/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1333 - val_loss: 0.1120

Epoch 4/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1254 - val_loss: 0.0942

Epoch 5/8

5/5 [==============================] - 0s 87ms/step - loss: 0.1122 - val_loss: 0.1037

Epoch 6/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1151 - val_loss: 0.0904

Epoch 7/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1084 - val_loss: 0.0922

Epoch 8/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1091 - val_loss: 0.0873

Epoch 1/8

5/5 [==============================] - 4s 220ms/step - loss: 0.4846 - val_loss: 0.3335

Epoch 2/8

5/5 [==============================] - 1s 124ms/step - loss: 0.2892 - val_loss: 0.2251

Epoch 3/8

5/5 [==============================] - 0s 84ms/step - loss: 0.2149 - val_loss: 0.1717

Epoch 4/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1393 - val_loss: 0.1104

Epoch 5/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1258 - val_loss: 0.0953

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1101 - val_loss: 0.0956

Epoch 7/8

5/5 [==============================] - 0s 85ms/step - loss: 0.1113 - val_loss: 0.0926

Epoch 8/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1102 - val_loss: 0.0887

Epoch 1/8

5/5 [==============================] - 3s 219ms/step - loss: 0.5529 - val_loss: 0.3860

Epoch 2/8

5/5 [==============================] - 0s 64ms/step - loss: 0.3421 - val_loss: 0.2278

Epoch 3/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1963 - val_loss: 0.1094

Epoch 4/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1275 - val_loss: 0.1176

Epoch 5/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1247 - val_loss: 0.0981

Epoch 6/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1137 - val_loss: 0.0946

Epoch 7/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1116 - val_loss: 0.0886

Epoch 8/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1086 - val_loss: 0.0869

Epoch 1/8

5/5 [==============================] - 4s 220ms/step - loss: 0.4849 - val_loss: 0.3383

Epoch 2/8

5/5 [==============================] - 0s 66ms/step - loss: 0.2608 - val_loss: 0.1820

Epoch 3/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1520 - val_loss: 0.1439

Epoch 4/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1365 - val_loss: 0.1055

Epoch 5/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1168 - val_loss: 0.1007

Epoch 6/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1155 - val_loss: 0.0919

Epoch 7/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1077 - val_loss: 0.0887

Epoch 8/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1076 - val_loss: 0.0874

Epoch 1/8

5/5 [==============================] - 4s 219ms/step - loss: 0.5882 - val_loss: 0.3979

Epoch 2/8

5/5 [==============================] - 0s 70ms/step - loss: 0.3944 - val_loss: 0.3196

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.3007 - val_loss: 0.2020

Epoch 4/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1702 - val_loss: 0.1035

Epoch 5/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1305 - val_loss: 0.0974

Epoch 6/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1117 - val_loss: 0.0956

Epoch 7/8

5/5 [==============================] - 0s 71ms/step - loss: 0.1145 - val_loss: 0.0932

Epoch 8/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1084 - val_loss: 0.0984

Epoch 1/8

5/5 [==============================] - 3s 217ms/step - loss: 0.4444 - val_loss: 0.2905

Epoch 2/8

5/5 [==============================] - 0s 65ms/step - loss: 0.2042 - val_loss: 0.1075

Epoch 3/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1278 - val_loss: 0.0964

Epoch 4/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1193 - val_loss: 0.1031

Epoch 5/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1146 - val_loss: 0.0865

Epoch 6/8

5/5 [==============================] - 0s 69ms/step - loss: 0.1068 - val_loss: 0.0916

Epoch 7/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1072 - val_loss: 0.0880

Epoch 8/8

5/5 [==============================] - 0s 70ms/step - loss: 0.1075 - val_loss: 0.0876

Epoch 1/8

5/5 [==============================] - 3s 239ms/step - loss: 0.5037 - val_loss: 0.3601

Epoch 2/8

5/5 [==============================] - 0s 70ms/step - loss: 0.2783 - val_loss: 0.2187

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1971 - val_loss: 0.1513

Epoch 4/8

5/5 [==============================] - 0s 87ms/step - loss: 0.1363 - val_loss: 0.1029

Epoch 5/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1162 - val_loss: 0.0950

Epoch 6/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1129 - val_loss: 0.0976

Epoch 7/8

5/5 [==============================] - 0s 83ms/step - loss: 0.1109 - val_loss: 0.0891

Epoch 8/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1092 - val_loss: 0.0878

Epoch 1/8

5/5 [==============================] - 4s 230ms/step - loss: 0.4347 - val_loss: 0.2433

Epoch 2/8

5/5 [==============================] - 0s 67ms/step - loss: 0.1994 - val_loss: 0.1239

Epoch 3/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1266 - val_loss: 0.1119

Epoch 4/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1237 - val_loss: 0.0857

Epoch 5/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1156 - val_loss: 0.1002

Epoch 6/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1092 - val_loss: 0.0965

Epoch 7/8

5/5 [==============================] - 0s 88ms/step - loss: 0.1104 - val_loss: 0.0855

Epoch 8/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1072 - val_loss: 0.0893

Epoch 1/8

5/5 [==============================] - 4s 236ms/step - loss: 0.4672 - val_loss: 0.3460

Epoch 2/8

5/5 [==============================] - 0s 73ms/step - loss: 0.2682 - val_loss: 0.2080

Epoch 3/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1880 - val_loss: 0.1477

Epoch 4/8

5/5 [==============================] - 0s 91ms/step - loss: 0.1397 - val_loss: 0.1021

Epoch 5/8

5/5 [==============================] - 0s 99ms/step - loss: 0.1178 - val_loss: 0.0896

Epoch 6/8

5/5 [==============================] - 0s 85ms/step - loss: 0.1164 - val_loss: 0.0922

Epoch 7/8

5/5 [==============================] - 0s 102ms/step - loss: 0.1106 - val_loss: 0.0891

Epoch 8/8

5/5 [==============================] - 0s 85ms/step - loss: 0.1082 - val_loss: 0.0941

Epoch 1/8

5/5 [==============================] - 4s 260ms/step - loss: 0.4650 - val_loss: 0.3402

Epoch 2/8

5/5 [==============================] - 0s 74ms/step - loss: 0.2561 - val_loss: 0.2101

Epoch 3/8

5/5 [==============================] - 0s 81ms/step - loss: 0.1868 - val_loss: 0.1034

Epoch 4/8

5/5 [==============================] - 0s 81ms/step - loss: 0.1276 - val_loss: 0.0948

Epoch 5/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1246 - val_loss: 0.0955

Epoch 6/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1088 - val_loss: 0.0968

Epoch 7/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1086 - val_loss: 0.0899

Epoch 8/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1077 - val_loss: 0.0859

Epoch 1/8

5/5 [==============================] - 4s 232ms/step - loss: 0.4851 - val_loss: 0.2090

Epoch 2/8

5/5 [==============================] - 0s 72ms/step - loss: 0.1803 - val_loss: 0.1379

Epoch 3/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1515 - val_loss: 0.0933

Epoch 4/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1217 - val_loss: 0.1008

Epoch 5/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1137 - val_loss: 0.0982

Epoch 6/8

5/5 [==============================] - 0s 84ms/step - loss: 0.1094 - val_loss: 0.0995

Epoch 7/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1106 - val_loss: 0.0870

Epoch 8/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1071 - val_loss: 0.0898

Epoch 1/8

5/5 [==============================] - 3s 247ms/step - loss: 0.5362 - val_loss: 0.3065

Epoch 2/8

5/5 [==============================] - 0s 73ms/step - loss: 0.2498 - val_loss: 0.1286

Epoch 3/8

5/5 [==============================] - 0s 81ms/step - loss: 0.1349 - val_loss: 0.1222

Epoch 4/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1174 - val_loss: 0.0950

Epoch 5/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1112 - val_loss: 0.0963

Epoch 6/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1128 - val_loss: 0.0901

Epoch 7/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1057 - val_loss: 0.0920

Epoch 8/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1072 - val_loss: 0.0873

Epoch 1/8

5/5 [==============================] - 3s 226ms/step - loss: 0.3716 - val_loss: 0.1545

Epoch 2/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1517 - val_loss: 0.0954

Epoch 3/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1158 - val_loss: 0.1110

Epoch 4/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1160 - val_loss: 0.0982

Epoch 5/8

5/5 [==============================] - 0s 90ms/step - loss: 0.1128 - val_loss: 0.0883

Epoch 6/8

5/5 [==============================] - 1s 112ms/step - loss: 0.1098 - val_loss: 0.0931

Epoch 7/8

5/5 [==============================] - 0s 94ms/step - loss: 0.1102 - val_loss: 0.0879

Epoch 8/8

5/5 [==============================] - 0s 89ms/step - loss: 0.1084 - val_loss: 0.0883

Epoch 1/8

5/5 [==============================] - 5s 282ms/step - loss: 0.4775 - val_loss: 0.2264

Epoch 2/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1833 - val_loss: 0.1396

Epoch 3/8

5/5 [==============================] - 0s 73ms/step - loss: 0.1232 - val_loss: 0.1239

Epoch 4/8

5/5 [==============================] - 0s 75ms/step - loss: 0.1276 - val_loss: 0.0917

Epoch 5/8

5/5 [==============================] - 0s 83ms/step - loss: 0.1123 - val_loss: 0.0958

Epoch 6/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1100 - val_loss: 0.0873

Epoch 7/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1078 - val_loss: 0.0877

Epoch 8/8

5/5 [==============================] - 0s 80ms/step - loss: 0.1061 - val_loss: 0.0881

Epoch 1/8

5/5 [==============================] - 5s 294ms/step - loss: 0.4750 - val_loss: 0.3055

Epoch 2/8

5/5 [==============================] - 0s 67ms/step - loss: 0.2100 - val_loss: 0.1387

Epoch 3/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1286 - val_loss: 0.1087

Epoch 4/8

5/5 [==============================] - 0s 82ms/step - loss: 0.1174 - val_loss: 0.0927

Epoch 5/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1140 - val_loss: 0.0927

Epoch 6/8

5/5 [==============================] - 0s 77ms/step - loss: 0.1076 - val_loss: 0.0908

Epoch 7/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1094 - val_loss: 0.0899

Epoch 8/8

5/5 [==============================] - 0s 78ms/step - loss: 0.1072 - val_loss: 0.0885

Epoch 1/8

5/5 [==============================] - 5s 285ms/step - loss: 0.4185 - val_loss: 0.2925

Epoch 2/8

5/5 [==============================] - 0s 62ms/step - loss: 0.2248 - val_loss: 0.1906

Epoch 3/8

5/5 [==============================] - 0s 68ms/step - loss: 0.1634 - val_loss: 0.1329

Epoch 4/8

5/5 [==============================] - 0s 76ms/step - loss: 0.1273 - val_loss: 0.1117

Epoch 5/8

5/5 [==============================] - 0s 79ms/step - loss: 0.1206 - val_loss: 0.0983

Epoch 6/8

5/5 [==============================] - 0s 74ms/step - loss: 0.1089 - val_loss: 0.0910

Epoch 7/8